Want to know more about Microsoft 70-463 features? Want to learn more about the 70-463 training kit PDF? Study the 70-463 PDF and succeed with an absolute guarantee to pass the Microsoft 70-463 (Implementing a Data Warehouse with Microsoft SQL Server 2012) exam on your first attempt.

Free demo questions for Microsoft 70-463 Exam Dumps Below:

NEW QUESTION 1

HOTSPOT

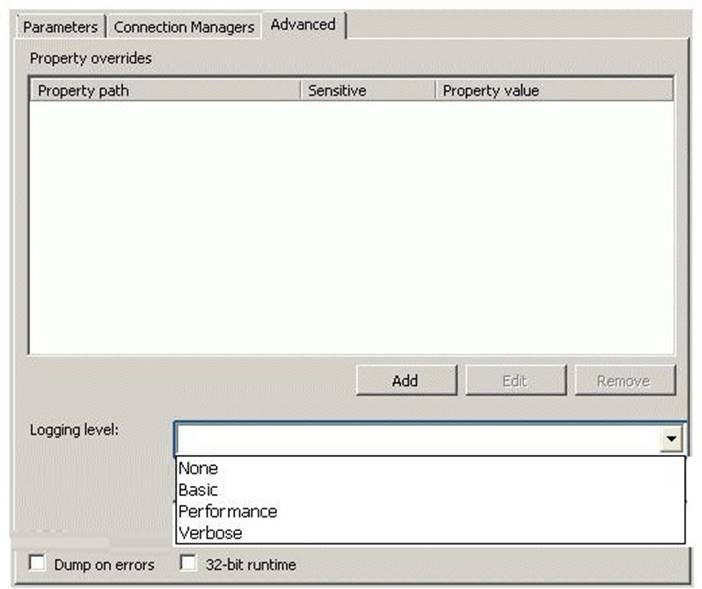

You are developing a SQL Server Integration Services (SSIS) package.

OnError and OnWarning events must be logged for viewing in the built-in SSIS reports by using SQL Server Management Studio.

You need to execute the package and minimize the number of event types that are logged. Which setting should you use? (To answer, change the appropriate setting in the answer area.)

Answer:

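Explanation: With the Performance logging level, only performance statistics and the OnError and OnWarning events are logged. Basic logs all events except custom and diagnostic events, and Verbose logs all events. Ref: http://msdn.microsoft.com/en-gb/library/hh231191.aspx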
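
The SSIS catalog logging levels can be thought of as fixed sets of event types. The Python sketch below is a conceptual model, not an SSIS API: the level names and what they log follow the SQL Server 2012 documentation (None, Basic, Performance, Verbose), while the event list is an illustrative subset chosen for this example. It picks the level that covers the required events while logging the fewest event types.

```python
# Conceptual model of SSIS catalog logging levels (SQL Server 2012).
# The event names are an illustrative subset, not an exhaustive SSIS list.
ALL_EVENTS = {
    "OnError", "OnWarning", "OnInformation", "OnPreExecute",
    "OnPostExecute", "OnProgress", "Custom", "Diagnostic",
}

LOGGING_LEVELS = {
    "None": set(),                                    # only execution status
    "Basic": ALL_EVENTS - {"Custom", "Diagnostic"},   # default level
    "Performance": {"OnError", "OnWarning"},          # plus performance statistics
    "Verbose": set(ALL_EVENTS),                       # everything
}


def minimal_level(required_events):
    """Return the level that logs every required event with the fewest event types."""
    candidates = [
        (len(events), name)
        for name, events in LOGGING_LEVELS.items()
        if set(required_events) <= events
    ]
    if not candidates:
        raise ValueError("no logging level covers the required events")
    return min(candidates)[1]


print(minimal_level({"OnError", "OnWarning"}))  # Performance
print(minimal_level({"OnInformation"}))         # Basic
```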

NEW QUESTION 2

You are implementing a SQL Server Integration Services (SSIS) package that imports Microsoft Excel workbook data into a Windows Azure SQL Database database. The package has been deployed to a production server that runs Windows Server 2008 R2 and SQL Server 2012.

The package fails when executed on the production server.

You need to ensure that the package can load the Excel workbook data without errors. You need to use the least amount of administrative effort to achieve this goal.

What should you do?

- A. Create a custom SSIS source component that encapsulates the 32-bit driver and compile it in 64-bit mode.

- B. Install a 64-bit ACE driver and execute the package by using the 64-bit run-time option.

- C. Execute the package by using the 32-bit run-time option.

- D. Replace the SSIS Excel source with a SSIS Flat File source.

Answer: C

Explanation: * See step 3 below.

To publish an Excel worksheet to Azure SQL Database, your package will contain a Data Flow Task, an Excel Source, and an ADO NET Destination.

1) Create an SSIS project.

2) Drop a Data Flow Task onto the Control Flow design surface, and double click the Data Flow Task.

3) Drop an Excel Source onto the Data Flow design surface.

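Note: When using the Excel Source on a 64-bit machine, set the Run64BitRuntime project property to False, because the Excel providers are often installed only in 32-bit form, and a 32-bit provider cannot be loaded by the 64-bit runtime.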

* Incorrect:

Not D: The Flat File source reads data from a text file. The text file can be in delimited, fixed width, or mixed format.
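
The failure comes down to process bitness: an in-process provider DLL can be loaded only by a process of the same bitness, so a 32-bit-only Excel provider is unusable from the 64-bit SSIS runtime. This Python sketch illustrates that rule as an analogy; `can_load_provider` is a hypothetical helper that only encodes the rule, while `process_bitness` reports the interpreter's own pointer size.

```python
import struct


def process_bitness():
    """Bitness of the current process: 64 on a 64-bit interpreter, 32 otherwise."""
    return struct.calcsize("P") * 8  # pointer size in bytes, converted to bits


def can_load_provider(provider_bitness, host_bitness):
    """Hypothetical rule check: an in-process provider loads only into a same-bitness host."""
    return provider_bitness == host_bitness


host = process_bitness()
print(f"This process is {host}-bit")
print("32-bit-only provider loadable:", can_load_provider(32, host))
```

Running the package with the 32-bit runtime option corresponds to making `host` equal to 32, which is why option C works without installing anything new.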

NEW QUESTION 3

You are installing the Data Quality Server component of Data Quality Services.

You need to provision the hardware and install the software for the server that runs the Data Quality Server.

You must ensure that the minimum Data Quality Server prerequisites are met. What should you do?

- A. Install SQL Server 2012 Database Engine.

- B. Install Microsoft SharePoint Server 2010 Enterprise Edition with PowerPivot.

- C. Make sure the server has at least 4 GB of RAM.

- D. Install Microsoft Internet Explorer 6.0 SP1 or later.

Answer: A

Explanation: Data Quality Server Minimum System Requirements

* SQL Server 2012 Database Engine.

* Memory (RAM): minimum 2 GB; 4 GB or more recommended.

Note: SQL Server Data Quality Services (DQS) is a new feature in SQL Server 2012 that contains the following two components: Data Quality Server and Data Quality Client.

NEW QUESTION 4

You are developing a SQL Server Integration Services (SSIS) project that copies a large number of rows from a SQL Azure database. The project uses the Package Deployment Model. This project is deployed to SQL Server on a test server.

You need to ensure that the project is deployed to the SSIS catalog on the production server. What should you do?

- A. Open a command prompt and run the dtexec /dumperror /conn command.

- B. Create a reusable custom logging component and use it in the SSIS project.

- C. Open a command prompt and run the gacutil command.

- D. Add an OnError event handler to the SSIS project.

- E. Open a command prompt and execute the package by using the SQL Log provider and running the dtexecui.exe utility.

- F. Open a command prompt and run the dtexec /rep /conn command.

- G. Open a command prompt and run the dtutil /copy command.

- H. Use an msi file to deploy the package on the server.

- I. Configure the SSIS solution to use the Project Deployment Model.

- J. Configure the output of a component in the package data flow to use a data tap.

- K. Run the dtutil command to deploy the package to the SSIS catalog and store the configuration in SQL Server.

Answer: I

Explanation: References:

http://msdn.microsoft.com/en-us/library/hh231102.aspx http://msdn.microsoft.com/en-us/library/hh213290.aspx http://msdn.microsoft.com/en-us/library/hh213373.aspx

NEW QUESTION 5

You are designing a data warehouse with two fact tables. The first table contains sales per month and the second table contains orders per day.

Referential integrity must be enforced declaratively.

You need to design a solution that can join a single time dimension to both fact tables. What should you do?

- A. Join the two fact tables.

- B. Merge the fact tables.

- C. Change the level of granularity in both fact tables to be the same.

- D. Partition the fact tables by day.

Answer: D

NEW QUESTION 6

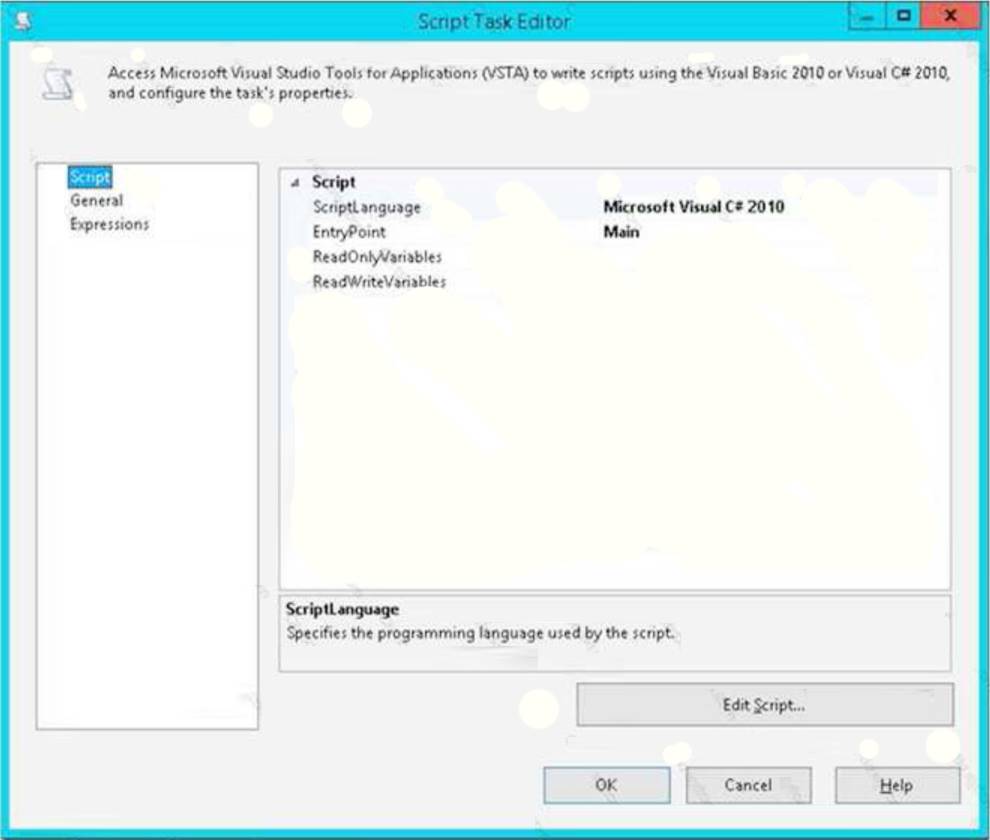

You have a SQL Server Integration Services (SSIS) package. The package contains a script task that has the following comment.

`// Update DataLoadBeginDate variable to the beginning of yesterday`

The script has the following code.

`Dts.Variables["User::DataLoadBeginDate"].Value = DateTime.Today.AddDays(-1);`

The script task is configured as shown in the exhibit. (Click the Exhibit button.)

When you attempt to execute the package, the package fails and returns the following error message: "Error: Exception has been thrown by the target of an invocation."

You need to execute the package successfully.

What should you do?

- A. Add the DataLoadBeginDate variable to the ReadOnlyVariables property.

- B. Add the DataLoadBeginDate variable to the ReadWriteVariables property.

- C. Modify the entry point of the script.

- D. Change the scope of the DataLoadBeginDate variable to Package.

Answer: B

Explanation: You add existing variables to the ReadOnlyVariables and ReadWriteVariables lists in the Script Task Editor to make them available to the custom script. Within the script, you access variables of both types through the Variables property of the Dts object.

References:

https://docs.microsoft.com/en-us/sql/integration-services/extending-packages-scripting/task/using-variables-in-the-script-task?view=sql-server-2021
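
A toy Python model of how the Script Task exposes variables. `ScriptVariables` is a hypothetical class, not part of SSIS; it only mimics the rule in the explanation: a variable must be listed in ReadOnlyVariables or ReadWriteVariables to be visible to the script, and in ReadWriteVariables to be assigned. In the real package the failure surfaces as the generic "Exception has been thrown by the target of an invocation" error rather than a named exception.

```python
from datetime import date, timedelta


class ScriptVariables:
    """Toy model (hypothetical) of the variables a Script Task can see.

    A variable is visible only if it is listed in ReadOnlyVariables or
    ReadWriteVariables, and writable only if it is in ReadWriteVariables.
    """

    def __init__(self, package_vars, read_only=(), read_write=()):
        self._values = dict(package_vars)  # private copy of the package variables
        self._read_only = set(read_only)
        self._read_write = set(read_write)

    def get(self, name):
        if name not in self._read_only | self._read_write:
            raise KeyError(f"{name} is not listed for the script")
        return self._values[name]

    def set(self, name, value):
        if name not in self._read_write:
            raise PermissionError(f"{name} is not in ReadWriteVariables")
        self._values[name] = value


yesterday = date.today() - timedelta(days=1)
package_vars = {"User::DataLoadBeginDate": None}

# Option A: listed only in ReadOnlyVariables, so the assignment is rejected.
ro = ScriptVariables(package_vars, read_only=["User::DataLoadBeginDate"])
try:
    ro.set("User::DataLoadBeginDate", yesterday)
except PermissionError as err:
    print("write rejected:", err)

# Option B: listed in ReadWriteVariables, so the assignment succeeds.
rw = ScriptVariables(package_vars, read_write=["User::DataLoadBeginDate"])
rw.set("User::DataLoadBeginDate", yesterday)
print(rw.get("User::DataLoadBeginDate") == yesterday)  # True
```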

NEW QUESTION 7

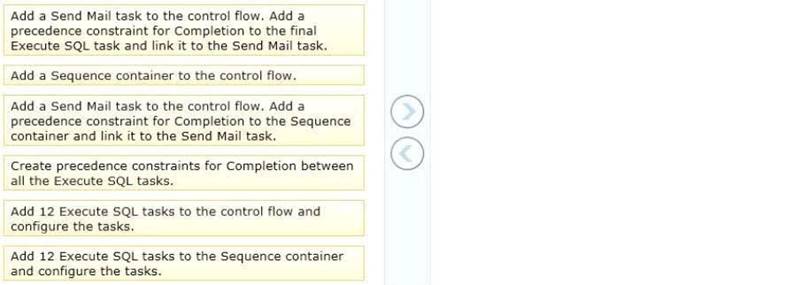

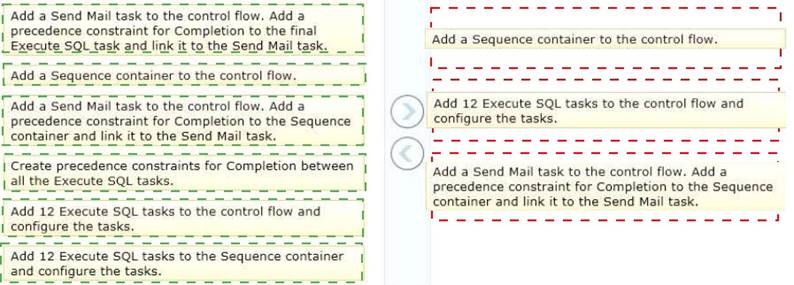

DRAG DROP

You are designing a SQL Server Integration Services (SSIS) package to execute 12 Transact-SQL (T-SQL) statements on a SQL Azure database. The T-SQL statements may be executed in any order. The T-SQL statements have unpredictable execution times.

You have the following requirements:

• The package must maximize parallel processing of the T-SQL statements.

• After all the T-SQL statements have completed, a Send Mail task must notify administrators.

You need to design the SSIS package. Which three actions should you perform in sequence? (To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.)

Answer:

Explanation:
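
As an analogy only, this Python sketch runs 12 independent work items concurrently and sends the notification after all of them finish. In SSIS, tasks with no precedence constraints between them may run in parallel (bounded by the package's MaxConcurrentExecutables property), and a precedence constraint leading out of a container waits until everything inside the container has completed. `run_statement` and `send_mail` are hypothetical stand-ins, and the sketch does not reproduce the answer area of this item.

```python
from concurrent.futures import ThreadPoolExecutor, wait
import random
import time


def run_statement(n):
    """Hypothetical stand-in for one Execute SQL task with an unpredictable duration."""
    time.sleep(random.uniform(0.01, 0.05))
    return f"statement {n} done"


def send_mail(results):
    """Hypothetical stand-in for the Send Mail task; called only after every statement ends."""
    return f"Notified administrators: {len(results)} statements completed"


statements = range(1, 13)  # 12 independent T-SQL statements, any order allowed
with ThreadPoolExecutor(max_workers=12) as pool:  # no ordering between statements
    futures = [pool.submit(run_statement, n) for n in statements]
    wait(futures)  # plays the role of a precedence constraint out of a container

message = send_mail([f.result() for f in futures])
print(message)  # Notified administrators: 12 statements completed
```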

NEW QUESTION 8

You are designing a SQL Server Integration Services (SSIS) data flow to load sales transactions from a source system into a data warehouse hosted on Windows Azure SQL Database. One of the columns in the data source is named ProductCode.

Some of the data to be loaded will reference products that need special processing logic in the data flow.

You need to enable separate processing streams for a subset of rows based on the source product code.

Which Data Flow transformation should you use?

- A. Multicast

- B. Conditional Split

- C. Destination Assistant

- D. Script Task

Answer: B

Explanation: We use Conditional Split to split the source data into separate processing streams.

A Script Component could also be used (it is the answer to another version of this question), but a Script Task is not the same thing: it is a control flow task, not a data flow transformation.
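
A small Python model contrasting the two transformations. Conditional Split sends each row to the first output whose condition evaluates to true, with non-matching rows going to the default output; Multicast copies every row to every output without filtering. The functions are hypothetical illustrations, and the product codes and row layout are made up.

```python
def conditional_split(rows, conditions, default="Default Output"):
    """Route each row to the FIRST output whose condition is true (like Conditional Split)."""
    outputs = {name: [] for name, _ in conditions}
    outputs[default] = []
    for row in rows:
        for name, predicate in conditions:
            if predicate(row):
                outputs[name].append(row)
                break
        else:  # no condition matched
            outputs[default].append(row)
    return outputs


def multicast(rows, output_names):
    """Send EVERY row to EVERY output (like Multicast); no filtering happens."""
    return {name: list(rows) for name in output_names}


SPECIAL_CODES = {"P-100", "P-200"}  # hypothetical product codes needing special logic
sales = [
    {"ProductCode": "P-100", "Amount": 10},
    {"ProductCode": "P-300", "Amount": 20},
    {"ProductCode": "P-200", "Amount": 30},
]

split = conditional_split(sales, [("Special", lambda r: r["ProductCode"] in SPECIAL_CODES)])
print([r["ProductCode"] for r in split["Special"]])         # ['P-100', 'P-200']
print([r["ProductCode"] for r in split["Default Output"]])  # ['P-300']
print(len(multicast(sales, ["A", "B"])["A"]))               # 3
```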

NEW QUESTION 9

Which of the following T-SQL functions is not very useful for capturing lineage information?

- A. APP_NAME()

- B. USER_NAME()

- C. DEVICE_STATUS()

- D. SUSER_SNAME()

Answer: C

NEW QUESTION 10

You develop a SQL Server Integration Services (SSIS) package in a project by using the Project Deployment Model. It is regularly executed within a multi-step SQL Server Agent job.

You make changes to the package that should improve performance.

You need to establish if there is a trend in the durations of the next 10 successful executions of the package. You need to use the least amount of administrative effort to achieve this goal.

What should you do?

- A. After 10 executions, view the job history for the SQL Server Agent job.

- B. After 10 executions, in SQL Server Management Studio, view the Execution Performance subsection of the All Executions report for the project.

- C. Enable logging to the Application Event Log in the package control flow for the OnInformation event. After 10 executions, view the Application Event Log.

- D. Enable logging to an XML file in the package control flow for the OnPostExecute event. After 10 executions, view the XML file.

Answer: B

Explanation: The All Executions report displays a summary of all Integration Services executions that have been performed on the server. There can be multiple executions of the sample package. Unlike the Integration Services Dashboard report, you can configure the All Executions report to show executions that have started during a range of dates. The dates can span multiple days, months, or years.

The report displays the following sections of information.

* Filter

Shows the current filter applied to the report, such as the Start time range.

* Execution Information

Shows the start time, end time, and duration for each package execution. You can view a list of the parameter values that were used with a package execution, such as values that were passed to a child package using the Execute Package task.
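
Once the durations of the 10 executions are read from the report, a trend can be quantified with a least-squares slope of duration against run number. This Python sketch is one possible way to do that (an assumption, not something the report itself computes); the durations are made-up sample values.

```python
def duration_trend(durations):
    """Least-squares slope of duration against run number (1..n).

    A negative slope means runs are getting faster; positive means slower.
    """
    n = len(durations)
    if n < 2:
        raise ValueError("need at least two executions")
    xs = range(1, n + 1)
    mean_x = (n + 1) / 2
    mean_y = sum(durations) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, durations))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


# Hypothetical durations (seconds) for 10 successful executions.
durations = [120, 118, 115, 116, 110, 108, 107, 104, 103, 100]
print(round(duration_trend(durations), 2))
```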

NEW QUESTION 11

You are developing a SQL Server Integration Services (SSIS) project by using the Project Deployment Model. All packages in the project must log custom messages.

You need to produce reports that combine the custom log messages with the system-generated log messages. What should you do?

- A. Use an event handler for OnError for the package.

- B. Use an event handler for OnError for each data flow task.

- C. Use an event handler for OnTaskFailed for the package.

- D. View the job history for the SQL Server Agent job.

- E. View the All Messages subsection of the All Executions report for the package.

- F. Store the System::SourceID variable in the custom log table.

- G. Store the System::ServerExecutionID variable in the custom log table.

- H. Store the System::ExecutionInstanceGUID variable in the custom log table.

- I. Enable the SSIS log provider for SQL Server for OnError in the package control flow.

- J. Enable the SSIS log provider for SQL Server for OnTaskFailed in the package control flow.

- K. Deploy the project by using dtutil.exe with the /COPY DTS option.

- L. Deploy the project by using dtutil.exe with the /COPY SQL option.

- M. Deploy the .ispac file by using the Integration Services Deployment Wizard.

- N. Create a SQL Server Agent job to execute the SSISDB.catalog.validate_project stored procedure.

- O. Create a SQL Server Agent job to execute the SSISDB.catalog.validate_package stored procedure.

- P. Create a SQL Server Agent job to execute the SSISDB.catalog.create_execution and SSISDB.catalog.start_execution stored procedures.

- Q. Create a table to store error information. Create an error output on each data flow destination that writes OnError event text to the table.

- R. Create a table to store error information. Create an error output on each data flow destination that writes OnTaskFailed event text to the table.

Answer: G
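
Storing System::ServerExecutionID works because, when a package runs on the SSIS server, that variable holds the execution ID assigned by the SSISDB catalog, which is also the key of the system-generated messages for that execution. The Python sketch below models the combined report as a group-by on that ID; the table layouts, column names, and messages are hypothetical.

```python
from collections import defaultdict

# Hypothetical rows: system messages keyed by the catalog execution ID, and a
# custom log table that stored System::ServerExecutionID with each message.
system_messages = [
    {"execution_id": 101, "message": "Package started"},
    {"execution_id": 101, "message": "Package finished"},
    {"execution_id": 102, "message": "Package started"},
]
custom_log = [
    {"server_execution_id": 101, "message": "Loaded 5000 sales rows"},
    {"server_execution_id": 102, "message": "Loaded 0 sales rows"},
]


def combined_report(system_rows, custom_rows):
    """Group system and custom messages under the execution they belong to."""
    report = defaultdict(list)
    for row in system_rows:
        report[row["execution_id"]].append(("system", row["message"]))
    for row in custom_rows:
        report[row["server_execution_id"]].append(("custom", row["message"]))
    return dict(report)


for execution_id, messages in sorted(combined_report(system_messages, custom_log).items()):
    print(execution_id, messages)
```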

NEW QUESTION 12

HOTSPOT

You are the Master Data Services (MDS) administrator at your company.

An existing user must be denied access to a certain hierarchy node for an existing model. You need to configure the user's permissions.

Which user management menu item should you select? (To answer, configure the appropriate option or options in the dialog box in the answer area.)

Answer:

Explanation:

NEW QUESTION 13

You are performance tuning a SQL Server Integration Services (SSIS) package to load sales data from a source system into a data warehouse that is hosted on Windows Azure SQL Database. The package contains a data flow task that has 10 source-to-destination execution trees.

Only five of the source-to-destination execution trees are running in parallel. You need to ensure that all the execution trees run in parallel.

What should you do?

- A. Add OnError and OnWarning event handlers.

- B. Create a new project and add the package to the project.

- C. Query the ExecutionLog table.

- D. Add an Execute SQL task to the event handler

Answer: A

NEW QUESTION 14

You are developing a SQL Server Integration Services (SSIS) project with multiple packages to copy data to a Windows Azure SQL Database database.

An automated process must validate all related Environment references, parameter data types, package references, and referenced assemblies. The automated process must run on a regular schedule.

You need to establish the automated validation process by using the least amount of administrative effort.

What should you do?

- A. Use an event handler for OnError for the package.

- B. Use an event handler for OnError for each data flow task.

- C. Use an event handler for OnTaskFailed for the package.

- D. View the job history for the SQL Server Agent job.

- E. View the All Messages subsection of the All Executions report for the package.

- F. Store the System::SourceID variable in the custom log table.

- G. Store the System::ServerExecutionID variable in the custom log table.

- H. Store the System::ExecutionInstanceGUID variable in the custom log table.

- I. Enable the SSIS log provider for SQL Server for OnError in the package control flow.

- J. Enable the SSIS log provider for SQL Server for OnTaskFailed in the package control flow.

- K. Deploy the project by using dtutil.exe with the /COPY DTS option.

- L. Deploy the project by using dtutil.exe with the /COPY SQL option.

- M. Deploy the .ispac file by using the Integration Services Deployment Wizard.

- N. Create a SQL Server Agent job to execute the SSISDB.catalog.validate_project stored procedure.

- O. Create a SQL Server Agent job to execute the SSISDB.catalog.validate_package stored procedure.

- P. Create a SQL Server Agent job to execute the SSISDB.catalog.create_execution and SSISDB.catalog.start_execution stored procedures.

- Q. Create a table to store error information. Create an error output on each data flow destination that writes OnError event text to the table.

- R. Create a table to store error information. Create an error output on each data flow destination that writes OnTaskFailed event text to the table.

Answer: N
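
A SQL Server Agent job step for this answer would call SSISDB.catalog.validate_project on a schedule. This Python helper only builds the T-SQL text for such a step. The parameter names (@folder_name, @project_name, @validate_type, @validation_id OUTPUT, @use32bitruntime, @environment_scope) are assumed from the catalog.validate_project reference and should be verified against it; the folder and project names in the usage are hypothetical. @environment_scope = 'A' asks the validation to include all environment references of the project.

```python
def quote_nvarchar(value):
    """Return a T-SQL N'...' literal with embedded single quotes doubled."""
    return "N'" + value.replace("'", "''") + "'"


def validate_project_step(folder, project, use32bit=False):
    """Build T-SQL text for an Agent job step that validates one catalog project.

    Parameter names are assumed from the catalog.validate_project reference.
    """
    return "\n".join([
        "DECLARE @validation_id BIGINT;",
        "EXEC SSISDB.catalog.validate_project",
        f"    @folder_name = {quote_nvarchar(folder)},",
        f"    @project_name = {quote_nvarchar(project)},",
        "    @validate_type = 'F',  -- full validation",
        "    @validation_id = @validation_id OUTPUT,",
        f"    @use32bitruntime = {1 if use32bit else 0},",
        "    @environment_scope = 'A';  -- all environment references",
    ])


# Hypothetical folder and project names.
print(validate_project_step("ETL", "AzureSalesLoad"))
```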

NEW QUESTION 15

You are installing SQL Server Data Quality Services (DQS).

You need to give users belonging to a specific Active Directory group access to the Data Quality Server.

Which SQL Server application should you use?

- A. Data Quality Client with administrative credentials

- B. SQL Server Configuration Manager with local administrative credentials

- C. SQL Server Data Tools with local administrative permissions

- D. SQL Server Management Studio with administrative credentials

Answer: D

NEW QUESTION 16

HOTSPOT

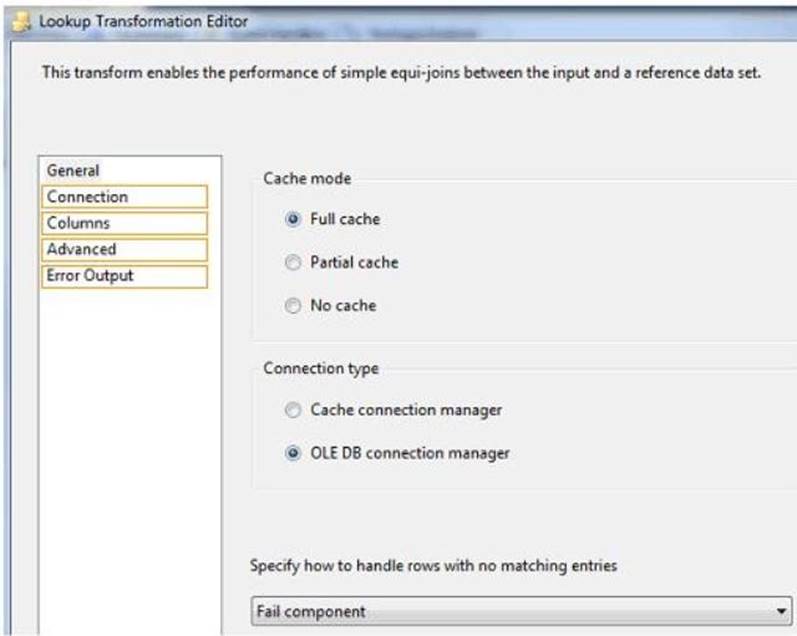

You are developing a data flow to load sales data into a fact table. In the data flow, you configure a Lookup Transformation in full cache mode to look up the product data for the sale.

The lookup source for the product data is contained in two tables.

You need to set the data source for the lookup to be a query that combines the two tables. Which page of the Lookup Transformation Editor should you select to configure the query? To answer, select the appropriate page in the answer area.

Answer:

Explanation: References:

http://msdn.microsoft.com/en-us/library/ms141821.aspx http://msdn.microsoft.com/en-us/library/ms189697.aspx
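
In full cache mode, the Lookup Transformation runs its reference query once and holds the whole result in memory before input rows are processed. This Python model mimics that: the dictionary built from two tables stands in for the query that combines them, and unmatched rows go to a separate list, similar to configuring the Lookup to redirect rows to its no-match output. Table names, columns, and values are hypothetical.

```python
# Hypothetical reference tables that the lookup query would combine (e.g. with a JOIN).
products = [
    {"ProductID": 1, "Name": "Bike", "SubcategoryID": 10},
    {"ProductID": 2, "Name": "Helmet", "SubcategoryID": 20},
]
subcategories = {10: "Bikes", 20: "Accessories"}


def build_full_cache(products, subcategories):
    """Run the 'query' once and keep every reference row in memory, keyed by ProductID."""
    return {
        p["ProductID"]: {"Name": p["Name"], "Subcategory": subcategories[p["SubcategoryID"]]}
        for p in products
    }


def lookup(sales_rows, cache):
    """Split input rows into matched and no-match outputs using the in-memory cache."""
    matched, no_match = [], []
    for row in sales_rows:
        ref = cache.get(row["ProductID"])
        if ref is None:
            no_match.append(row)
        else:
            matched.append({**row, **ref})
    return matched, no_match


cache = build_full_cache(products, subcategories)  # loaded before any sales row is processed
matched, no_match = lookup([{"ProductID": 1, "Qty": 3}, {"ProductID": 9, "Qty": 1}], cache)
print(matched)   # [{'ProductID': 1, 'Qty': 3, 'Name': 'Bike', 'Subcategory': 'Bikes'}]
print(no_match)  # [{'ProductID': 9, 'Qty': 1}]
```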

NEW QUESTION 17

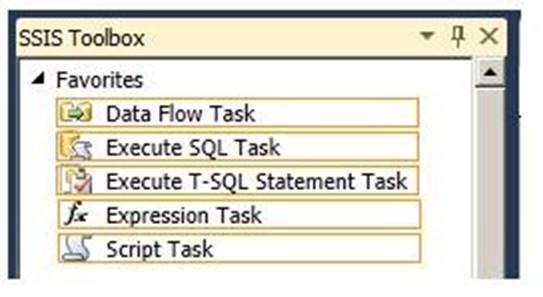

HOTSPOT

You are developing a SQL Server Integration Services (SSIS) package.

The package must run a parameterized query against a Windows Azure SQL Database database. You need to use the least amount of development effort to meet the package requirement. Which task should you use? (To answer, select the appropriate task in the answer area.)

Answer:

Explanation: Running Parameterized SQL Commands

SQL statements and stored procedures frequently use input parameters, output parameters, and return codes. The Execute SQL task supports the Input, Output, and ReturnValue parameter types. You use the Input type for input parameters, Output for output parameters, and ReturnValue for return codes.

Ref: http://msdn.microsoft.com/en-us/library/ms141003.aspx

In SSIS there are two tasks that can be used to execute SQL statements: Execute T-SQL Statement and Execute SQL. What is the difference between the two?

The Execute T-SQL Statement task takes less memory, parse time, and CPU time than the Execute SQL task, but is not as flexible. If you need to run parameterized queries, save the query results to variables, or use property expressions, you should use the Execute SQL task instead of the Execute T-SQL Statement task.

Ref: http://www.sqlservercentral.com/blogs/jamesserra/2012/11/08/ssis-execute-sql-task-vs-execute-t-sql-statement-task/
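
The principle behind a parameterized query can be shown with Python's built-in sqlite3 module, used here only as a stand-in for Azure SQL Database: the value is bound to a placeholder instead of being concatenated into the SQL text. In the Execute SQL task the placeholder syntax depends on the connection type (for example `?` for OLE DB and `@Name` for ADO.NET). The table and data are hypothetical.

```python
import sqlite3

# In-memory SQLite database standing in for the Azure SQL Database target.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Sales (SaleID INTEGER, Region TEXT, Amount REAL)")
conn.executemany(
    "INSERT INTO Sales VALUES (?, ?, ?)",
    [(1, "West", 100.0), (2, "East", 250.0), (3, "West", 75.0)],
)

# Input parameter bound by position, never concatenated into the SQL text.
region = "West"
total = conn.execute(
    "SELECT SUM(Amount) FROM Sales WHERE Region = ?", (region,)
).fetchone()[0]
print(total)  # 175.0
conn.close()
```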

NEW QUESTION 18

You are using the Knowledge Discovery feature of the Data Quality Services (DQS) client application to modify an existing knowledge base.

In the mapping configuration, two of the three columns are mapped to existing domains in the knowledge base. The third column, named Group, does not yet have a domain.

You need to complete the mapping of the Group column. What should you do?

- A. Map a composite domain to the source column.

- B. Create a composite domain that includes the Group column.

- C. Add a domain for the Group column.

- D. Add a column mapping for the Group column.

Answer: C

P.S. Easily pass the 70-463 exam with the 270 Q&As in the Certleader dumps and PDF version. Welcome to download the newest Certleader 70-463 dumps: https://www.certleader.com/70-463-dumps.html (270 new questions)