We provide the 70-767 material in two formats: a downloadable PDF and practice tests, so you can pass the Microsoft 70-767 exam quickly and easily. The PDF version can be read on screen or printed, so you can print as many copies as you need and practice repeatedly. With the help of our material, you can pass the 70-767 exam.

Free 70-767 Demo Online For Microsoft Certification:

NEW QUESTION 1

You need to load data from a CSV file to a table.

How should you complete the Transact-SQL statement? To answer, drag the appropriate Transact-SQL segments to the correct locations. Each Transact-SQL segment may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Answer:

Explanation: The Merge transformation combines two sorted datasets into a single dataset. The rows from each dataset are inserted into the output based on values in their key columns.

By including the Merge transformation in a data flow, you can merge data from two data sources, such as tables and files.
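The merge behaviour described above can be illustrated outside SSIS with a minimal Python sketch. It is not the SSIS implementation; it only assumes that both inputs are already sorted on the same key column, as the explanation describes. The row contents and the `merge_sorted` helper are hypothetical.

```python
import heapq
from operator import itemgetter


def merge_sorted(left, right, key):
    """Interleave two inputs that are already sorted on `key` into one sorted output."""
    return list(heapq.merge(left, right, key=itemgetter(key)))


# Hypothetical rows from two sources, each pre-sorted on "id".
table_rows = [{"id": 1, "src": "table"}, {"id": 4, "src": "table"}]
file_rows = [{"id": 2, "src": "file"}, {"id": 3, "src": "file"}]

merged = merge_sorted(table_rows, file_rows, "id")
print([row["id"] for row in merged])  # [1, 2, 3, 4]
```

If either input were not sorted on the key, the output order would no longer be guaranteed, which is why the transformation is described as working on sorted datasets.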

References:

https://docs.microsoft.com/en-us/sql/integration-services/data-flow/transformations/merge-transformation?view

NEW QUESTION 2

You manage Master Data Services (MDS).

You need to create a new entity with the following requirements:

• Maximize the performance of the MDS system.

• Ensure that the Entity change logs are stored.

You need to configure the Transaction Log Type setting. Which type should you use?

- A. Full

- B. None

- C. Attribute

- D. Member

- E. Simple

Answer: D

NEW QUESTION 3

Note: This question is part of a series of questions that use the same scenario. For your convenience, the scenario is repeated in each question. Each question presents a different goal and answer choices, but the text of the scenario is exactly the same in each question in the series.

Start of repeated scenario

Contoso, Ltd. has a Microsoft SQL Server environment that includes SQL Server Integration Services (SSIS), a data warehouse, and SQL Server Analysis Services (SSAS) Tabular and multidimensional models.

The data warehouse stores data related to your company sales, financial transactions, and financial budgets. All data for the data warehouse originates from the company's business financial system.

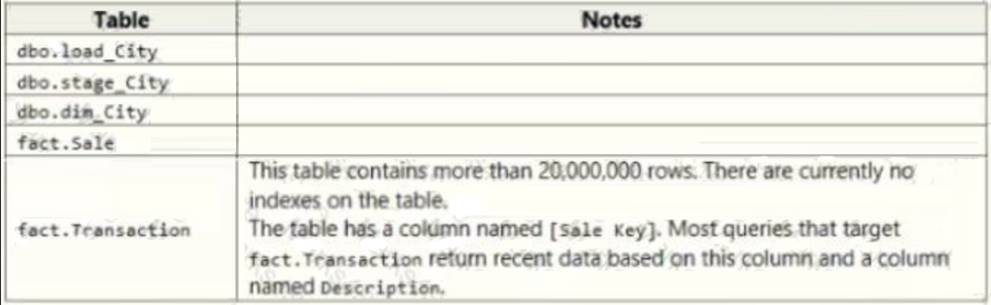

The data warehouse includes the following tables:

The company plans to use Microsoft Azure to store older records from the data warehouse. You must modify the database to enable the Stretch Database capability.

Users report that they are becoming confused about which city table to use for various queries. You plan to create a new schema named Dimension and change the name of the dbo.du_city table to Dimension.city. Data loss is not permissible, and you must not leave traces of the old table in the data warehouse.

You plan to create a measure that calculates the profit margin based on the existing measures.

You must improve performance for queries against the fact.Transaction table. You must implement appropriate indexes and enable the Stretch Database capability.

End of repeated scenario

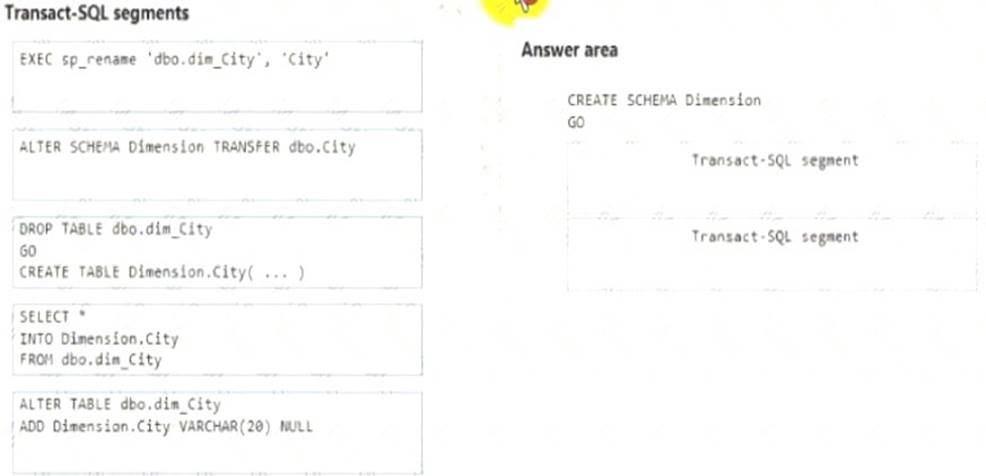

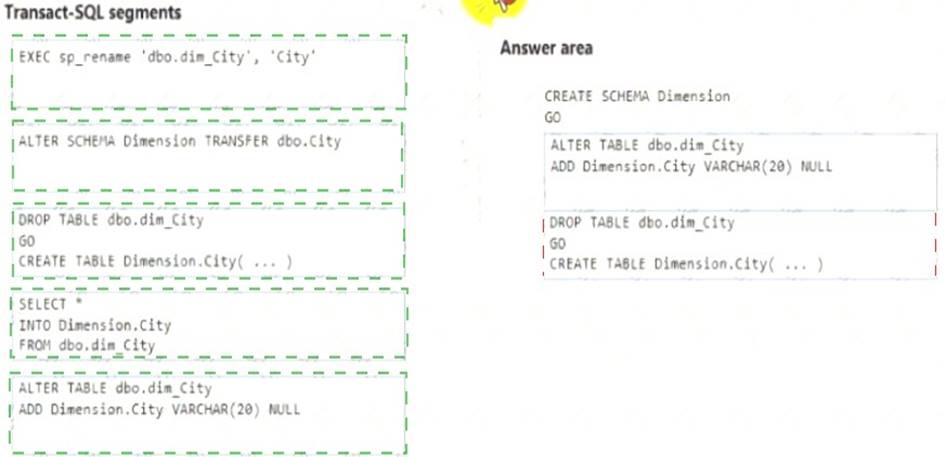

You need to resolve the problems reported about the dia city table.

How should you complete the Transact-SQL statement? To answer, drag the appropriate Transact-SQL segments to the correct locations. Each Transact-SQL segment may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

Answer:

Explanation:

NEW QUESTION 4

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have a Microsoft SQL Server instance that has Data Quality Services (DQS) installed.

You need to review the completeness and the uniqueness of the data stored in the matching policy.

Solution: You profile the data.

Does this meet the goal?

- A. Yes

- B. No

Answer: B

Explanation: Use a matching rule.

References:

https://docs.microsoft.com/en-us/sql/data-quality-services/create-a-matching-policy?view=sql-server-2021

NEW QUESTION 5

You have a Microsoft SQL Server Integration Services (SSIS) package that contains a Data Flow task as shown in the Data Flow exhibit. (Click the Exhibit button.)

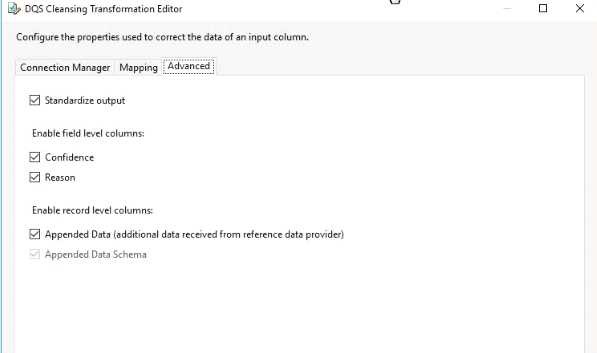

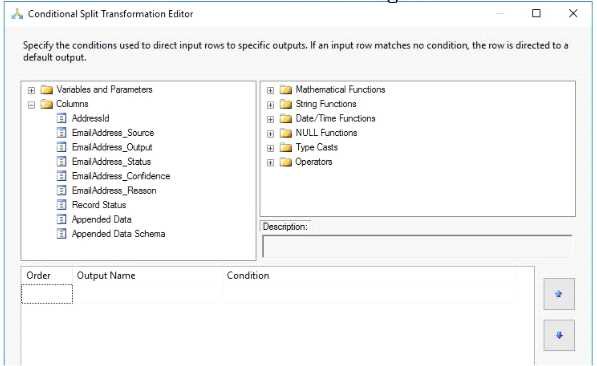

You install Data Quality Services (DQS) on the same server that hosts SSIS and deploy a knowledge base to manage customer email addresses. You add a DQS Cleansing transform to the Data Flow as shown in the Cleansing exhibit. (Click the Exhibit button.)

You create a Conditional Split transform as shown in the Splitter exhibit. (Click the Exhibit button.)

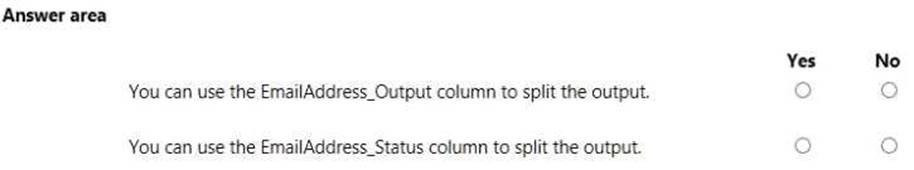

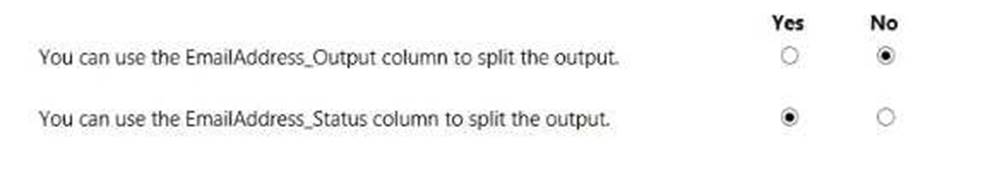

You need to split the output of the DQS Cleansing task to obtain only Correct values from the EmailAddress column. For each of the following statements, select Yes if the statement is true. Otherwise, select No.

Answer:

Explanation:

NEW QUESTION 6

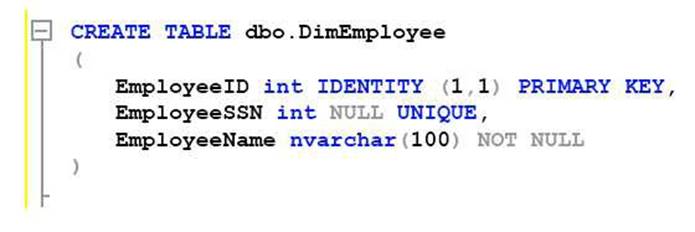

You manage a data warehouse in a Microsoft SQL Server instance. Company employee information is imported from the human resources system to a table named Employee in the data warehouse instance. The Employee table was created by running the query shown in the Employee Schema exhibit. (Click the Exhibit button.)

The personal identification number is stored in a column named EmployeeSSN. All values in the EmployeeSSN column must be unique.

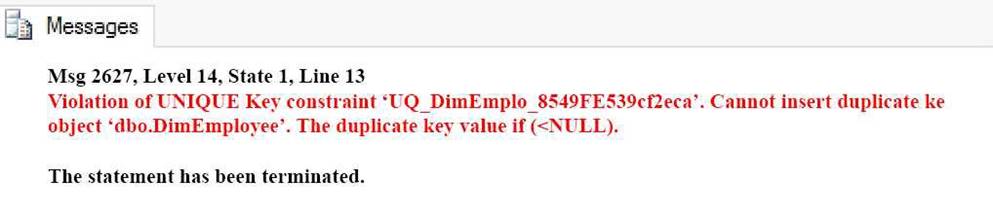

When importing employee data, you receive the error message shown in the SQL Error exhibit. (Click the Exhibit button.).

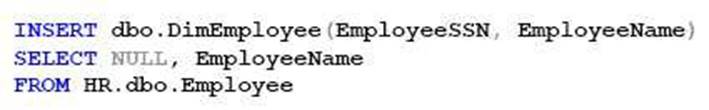

You determine that the Transact-SQL statement shown in the Data Load exhibit in the cause of the error. (Click the Exhibit button.)

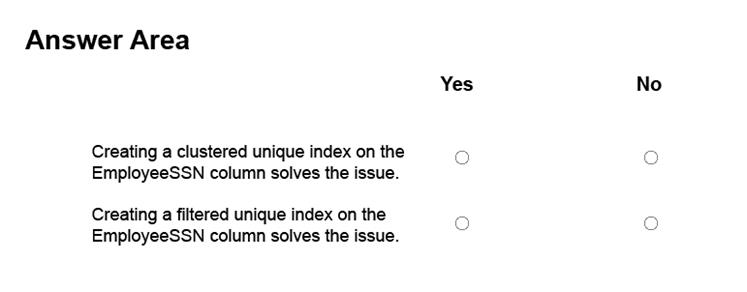

You remove the constraint on the EmployeeSSN column. You need to ensure that values in the EmployeeSSN column are unique.

For each of the following statements, select Yes if the statement is true. Otherwise, select No. NOTE: Each correct selection is worth one point.

Answer:

Explanation: Under the ANSI standards SQL:92, SQL:1999 and SQL:2003, a UNIQUE constraint must disallow duplicate non-NULL values but accept multiple NULL values.

In SQL Server, however, a UNIQUE constraint allows a single NULL but not multiple NULLs. From SQL Server 2008 onward, you can define a unique filtered index based on a predicate that excludes NULLs.

References:

https://stackoverflow.com/questions/767657/how-do-i-create-a-unique-constraint-that-also-allows-nulls

NEW QUESTION 7

Note: This question is part of a series of questions that use the same or similar answer choices. An answer choice may be correct for more than one question in the series. Each question is independent of the other questions in this series. Information and details provided in a question apply only to that question.

You are designing a data warehouse and the load process for the data warehouse.

You have a source system that contains two tables named Table1 and Table2. All the rows in each table have a corresponding row in the other table.

The primary key for Table1 is named Key1. The primary key for Table2 is named Key2.

You need to combine both tables into a single table named Table3 in the data warehouse. The solution must ensure that all the nonkey columns in Table1 and Table2 exist in Table3. Which component should you use to load the data to the data warehouse?

- A. the Slowly Changing Dimension transformation

- B. the Conditional Split transformation

- C. the Merge transformation

- D. the Data Conversion transformation

- E. an Execute SQL task

- F. the Aggregate transformation

- G. the Lookup transformation

Answer: G

Explanation: The Lookup transformation performs lookups by joining data in input columns with columns in a reference dataset. You use the lookup to access additional information in a related table that is based on values in common columns.

You can configure the Lookup transformation in several ways, including the following:

- Specify joins between the input and the reference dataset.

- Add columns from the reference dataset to the Lookup transformation output.
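The lookup behaviour described in the explanation can be sketched in plain Python. This is only an illustration of the join-and-add-columns idea, not SSIS itself. The `lookup` helper, the `Name` and `City` columns, and the sample values are hypothetical, and the sketch assumes that Key1 and Key2 hold matching values and that reference keys are unique.

```python
def lookup(input_rows, reference_rows, input_key, reference_key, add_columns):
    """Return (matched, unmatched); matched rows gain the requested reference columns."""
    # Index the reference dataset once so each input row is a dictionary lookup.
    index = {ref[reference_key]: ref for ref in reference_rows}
    matched, unmatched = [], []
    for row in input_rows:
        ref = index.get(row[input_key])
        if ref is None:
            unmatched.append(row)
        else:
            matched.append({**row, **{col: ref[col] for col in add_columns}})
    return matched, unmatched


# Hypothetical sample data: Table1 is the input, Table2 is the reference dataset.
table1 = [{"Key1": 1, "Name": "Ann"}, {"Key1": 2, "Name": "Bob"}]
table2 = [{"Key2": 1, "City": "Oslo"}]

matched, unmatched = lookup(table1, table2, "Key1", "Key2", ["City"])
print(matched)    # [{'Key1': 1, 'Name': 'Ann', 'City': 'Oslo'}]
print(unmatched)  # [{'Key1': 2, 'Name': 'Bob'}]
```

In the scenario every row has a partner, so the unmatched list would stay empty; it is included here only to show what happens to rows without a reference match.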

NEW QUESTION 8

Note: This question is part of a series of questions that use the same or similar answer choices. An answer choice may be correct for more than one question in the series. Each question is independent of the other questions in this series. Information and details provided in a question apply only to that question.

You have a database named DB1 that has change data capture enabled.

A Microsoft SQL Server Integration Services (SSIS) job runs once weekly. The job loads changes from DB1 to a data warehouse by querying the change data capture tables.

You discover that the job loads changes from the previous three days only. You need to ensure that the job loads changes from the previous week. Which stored procedure should you execute?

- A. catalog.deploy_project

- B. catalog.restore_project

- C. catalog.stop_operation

- D. sys.sp_cdc_add_job

- E. sys.sp_cdc_change_job

- F. sys.sp_cdc_disable_db

- G. sys.sp_cdc_enable_db

- H. sys.sp_cdc_stop_job

Answer: E

Explanation: sys.sp_cdc_change_job modifies the configuration of a change data capture cleanup or capture job in the current database. The cleanup job retains change rows for three days (4,320 minutes) by default, so raising its retention to at least one week keeps the changes available for the weekly load.

References:

https://docs.microsoft.com/en-us/sql/relational-databases/system-stored-procedures/sys-sp-cdc-change-job-trans

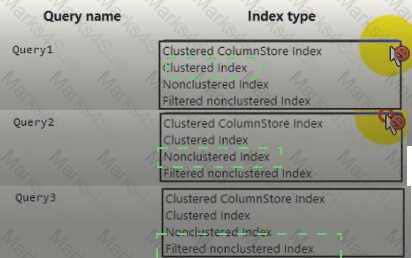

NEW QUESTION 9

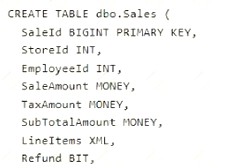

You have a database that includes a table named dbo.sales. The table contains two billion rows. You created the table by running the following Transact-SQL statement:

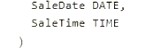

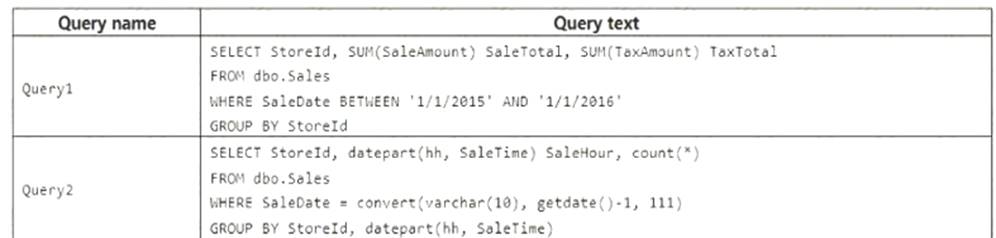

You run the following queries against the dbo.sales table. All of the queries perform poorly.

The ETL process that populates the table uses bulk insert to load 10 million rows each day. The process currently takes six hours to load the records.

The value of the Refund column is equal to 1 for only 0.01 percent of the rows in the table. For all other rows, the value of the Refund column is equal to 0.

You need to maximize the performance of queries and the ETL process.

Which index type should you use for each query? To answer, select the appropriate index types in the answer area.

NOTE: Each correct selection is worth one point.

Answer:

Explanation:

NEW QUESTION 10

Note: This question is part of a series of questions that use the same or similar answer choices. An answer choice may be correct for more than one question in the series. Each question is independent of the other questions in this series. Information and details provided in a question apply only to that question.

You have a database named DB1 that has change data capture enabled.

A Microsoft SQL Server Integration Services (SSIS) job runs once weekly. The job loads changes from DB1 to a data warehouse by querying the change data capture tables.

Users report that an application that uses DB1 is suddenly unresponsive.

You discover that the Integration Services job causes severe blocking issues in the application. You need to ensure that the users can run the application as quickly as possible. Your SQL Server login is a member of only the ssis_admin database role.

Which stored procedure should you execute?

- A. catalog.deploy_project

- B. catalog.restore_project

- C. catalog.stop_operation

- D. sys.sp_cdc_add_job

- E. sys.sp_cdc_change_job

- F. sys.sp_cdc_disable_db

- G. sys.sp_cdc_enable_db

- H. sys.sp_cdc_stop_job

Answer: E

Explanation: sys.sp_cdc_change_job modifies the configuration of a change data capture cleanup or capture job in the current database.

References:

https://docs.microsoft.com/en-us/sql/relational-databases/system-stored-procedures/sys-sp-cdc-change-job-trans

NEW QUESTION 11

Note: This question is part of a series of questions that use the same or similar answer choices. An answer choice may be correct for more than one question in the series. Each question is independent of the other questions in this series. Information and details provided in a question apply only to that question.

You have a database named DB1 that has change data capture enabled.

A Microsoft SQL Server Integration Services (SSIS) job runs once weekly. The job loads changes from DB1 to a data warehouse by querying the change data capture tables.

You remove the Integration Services job.

You need to stop tracking changes to the database. The solution must remove all the change data capture configurations from DB1.

Which stored procedure should you execute?

- A. catalog.deploy_project

- B. catalog.restore_project

- C. catalog.stop_operation

- D. sys.sp_cdc_add_job

- E. sys.sp_cdc_change_job

- F. sys.sp_cdc_disable_db

- G. sys.sp_cdc_enable_db

- H. sys.sp_cdc_stop_job

Answer: F

NEW QUESTION 12

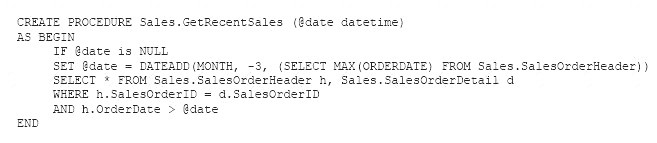

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have a data warehouse that stores information about products, sales, and orders for a manufacturing company. The instance contains a database that has two tables named SalesOrderHeader and SalesOrderDetail. SalesOrderHeader has 500,000 rows and SalesOrderDetail has 3,000,000 rows.

Users report performance degradation when they run the following stored procedure:

You need to optimize performance.

Solution: You run the following Transact-SQL statement:

Does the solution meet the goal?

- A. Yes

- B. No

Answer: A

Explanation: You can specify the sample size as a percent. A 5% statistics sample size would be helpful.

References: https://docs.microsoft.com/en-us/azure/sql-data-warehouse/sql-data-warehouse-tables-statistics

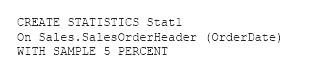

NEW QUESTION 13

A company plans to load data from a CSV file that is stored in a Microsoft Azure Blob storage container. You need to load the data.

How should you complete the Transact-SQL statement? To answer, drag the appropriate Transact-SQL segments to the correct locations. Each Transact-SQL segment may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.

NOTE: Each correct selection is worth one point.

Answer:

Explanation: Create DATABASE CREATE EXTERNAL

BULK invoice From invoice.csv

NEW QUESTION 14

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You are the administrator of a Microsoft SQL Server Master Data Services (MDS) instance. The instance contains a model named Geography and a model named Customer. The Geography model contains an entity named CountryRegion.

You need to ensure that the CountryRegion entity members are available in the Customer model.

Solution: In the Customer model, add a domain-based attribute to reference the CountryRegion entity in the Geography model.

Does the solution meet the goal?

- A. Yes

- B. No

Answer: A

NEW QUESTION 15

Note: This question is part of a series of questions that use the same or similar answer choices. An answer choice may be correct for more than one question in the series. Each question is independent of the other questions in this series. Information and details provided in a question apply only to that question.

You are loading data from an OLTP database to a data warehouse. The database contains a table named Sales.

Sales contains details of records that have a type of refund and records that have a type of sales. The data warehouse design contains a table for sales data and a table for refund data.

Which component should you use to load the data to the warehouse?

- A. the Slowly Changing Dimension transformation

- B. the Conditional Split transformation

- C. the Merge transformation

- D. the Data Conversion transformation

- E. an Execute SQL task

- F. the Aggregate transformation

- G. the Lookup transformation

Answer: B

Explanation: The Conditional Split transformation can route data rows to different outputs depending on the content of the data. The implementation of the Conditional Split transformation is similar to a CASE decision structure in a programming language. The transformation evaluates expressions, and based on the results, directs the data row to the specified output. This transformation also provides a default output, so that if a row matches no expression it is directed to the default output.
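The routing logic described above can be sketched in plain Python. This is an illustration only, not the SSIS component: the `conditional_split` helper, the output names, and the sample rows (including the `type` column) are hypothetical. Each row goes to the first output whose condition is true, and rows that match nothing fall through to a default output.

```python
def conditional_split(rows, conditions, default_output="default"):
    """Send each row to the first output whose condition is true, else the default output."""
    outputs = {name: [] for name, _ in conditions}
    outputs[default_output] = []
    for row in rows:
        for name, predicate in conditions:
            if predicate(row):
                outputs[name].append(row)
                break
        else:
            # No condition matched, so the row goes to the default output.
            outputs[default_output].append(row)
    return outputs


# Hypothetical rows from the Sales table, tagged with a record type.
sales_rows = [
    {"id": 1, "type": "sales"},
    {"id": 2, "type": "refund"},
    {"id": 3, "type": "unknown"},
]

routed = conditional_split(
    sales_rows,
    [
        ("sales", lambda r: r["type"] == "sales"),
        ("refund", lambda r: r["type"] == "refund"),
    ],
)
print({name: [r["id"] for r in out] for name, out in routed.items()})
# {'sales': [1], 'refund': [2], 'default': [3]}
```

In the scenario, the "sales" and "refund" outputs would each feed the matching warehouse table.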

References:

https://docs.microsoft.com/en-us/sql/integration-services/data-flow/transformations/conditional-split-transformation

NEW QUESTION 16

Note: This question is part of a series of questions that use the same scenario. For your convenience, the scenario is repeated in each question. Each question presents a different goal and answer choices, but the text of the scenario is exactly the same in each question in this series.

You have a Microsoft SQL Server data warehouse instance that supports several client applications. The data warehouse includes the following tables: Dimension.SalesTerritory, Dimension.Customer, Dimension.Date, Fact.Ticket, and Fact.Order. The Dimension.SalesTerritory and Dimension.Customer tables are frequently updated. The Fact.Order table is optimized for weekly reporting, but the company wants to change it to daily. The Fact.Order table is loaded by using an ETL process. Indexes have been added to the table over time, but the presence of these indexes slows data loading.

All data in the data warehouse is stored on a shared SAN. All tables are in a database named DB1. You have a second database named DB2 that contains copies of production data for a development environment. The data warehouse has grown and the cost of storage has increased. Data older than one year is accessed infrequently and is considered historical.

You have the following requirements:

- Implement table partitioning to improve the manageability of the data warehouse and to avoid the need to repopulate all transactional data each night. Use a partitioning strategy that is as granular as possible.

- Partition the Fact.Order table and retain a total of seven years of data.

- Partition the Fact.Ticket table and retain seven years of data. At the end of each month, the partition structure must apply a sliding window strategy to ensure that a new partition is available for the upcoming month, and that the oldest month of data is archived and removed.

- Optimize data loading for the Dimension.SalesTerritory, Dimension.Customer, and Dimension.Date tables.

- Maximize the performance during the data loading process for the Fact.Order partition.

- Ensure that historical data remains online and available for querying.

- Reduce ongoing storage costs while maintaining query performance for current data.

You are not permitted to make changes to the client applications.

You need to configure the Fact.Order table.

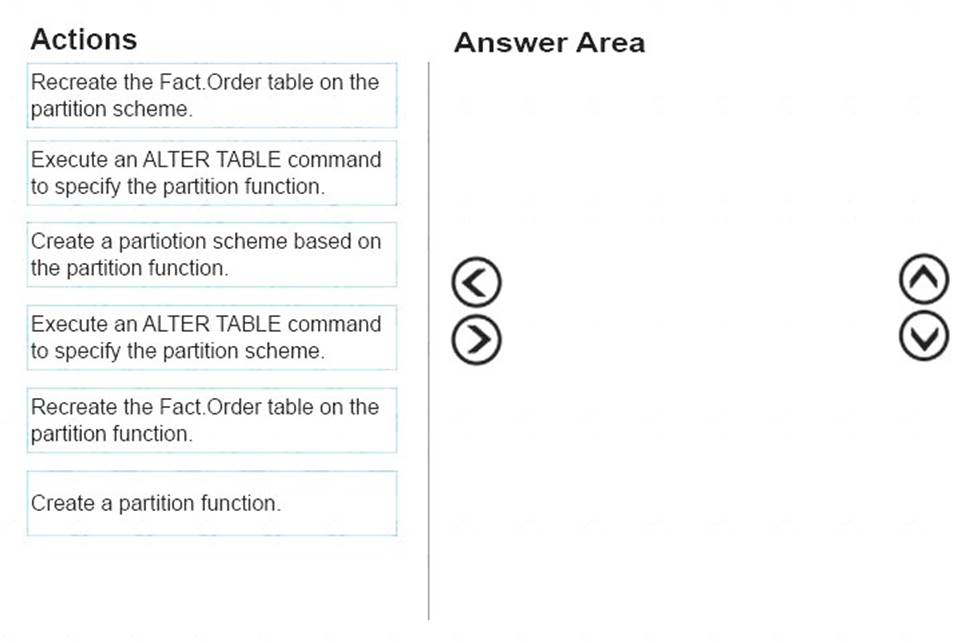

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Answer:

Explanation: From scenario: Partition the Fact.Order table and retain a total of seven years of data. Maximize the performance during the data loading process for the Fact.Order partition.

Step 1: Create a partition function.

Using CREATE PARTITION FUNCTION is the first step in creating a partitioned table or index.

Step 2: Create a partition scheme based on the partition function.

Step 3: Execute an ALTER TABLE command to specify the partition function.
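To make the role of the partition function more concrete, here is a small Python sketch of how a range partition function maps a value to a partition number. It is an illustration under stated assumptions, not SQL Server code: the monthly boundary dates and the `partition_number` helper are hypothetical, and the RANGE RIGHT / RANGE LEFT distinction comes from the CREATE PARTITION FUNCTION syntax rather than from the scenario text.

```python
from bisect import bisect_left, bisect_right
from datetime import date


def partition_number(value, boundaries, range_right=True):
    """Return the 1-based partition that `value` falls into for sorted `boundaries`."""
    if range_right:
        # RANGE RIGHT: a boundary value belongs to the partition on its right.
        return bisect_right(boundaries, value) + 1
    # RANGE LEFT: a boundary value belongs to the partition on its left.
    return bisect_left(boundaries, value) + 1


# Hypothetical monthly boundaries; three boundaries define four partitions.
monthly_boundaries = [date(2024, 1, 1), date(2024, 2, 1), date(2024, 3, 1)]

print(partition_number(date(2023, 12, 31), monthly_boundaries))                   # 1
print(partition_number(date(2024, 2, 1), monthly_boundaries))                     # 3
print(partition_number(date(2024, 2, 1), monthly_boundaries, range_right=False))  # 2
```

With date boundaries on the first of each month, the RANGE RIGHT form keeps each calendar month in its own partition, which is one reason it is a common choice for date-based partitioning.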

References: https://docs.microsoft.com/en-us/azure/sql-data-warehouse/sql-data-warehouse-tables-partition

P.S. Certleader now offers 70-767 dumps with a 100% pass guarantee. All 70-767 exam questions have been updated with correct answers: https://www.certleader.com/70-767-dumps.html (109 New Questions)