Free online questions and answers for the Cloudera CCA-500 exam, Cloudera Certified Administrator for Apache Hadoop (CCAH), new version:

NEW QUESTION 1

Which two features does Kerberos security add to a Hadoop cluster? (Choose two)

- A. User authentication on all remote procedure calls (RPCs)

- B. Encryption for data during transfer between the Mappers and Reducers

- C. Encryption for data on disk (“at rest”)

- D. Authentication for user access to the cluster against a central server

- E. Root access to the cluster for users hdfs and mapred but non-root access for clients

Answer: AD

NEW QUESTION 2

You want to understand more about how users browse your public website. For example, you want to know which pages they visit prior to placing an order. You have a server farm of 200 web servers hosting your website. Which is the most efficient process to gather these web server logs into your Hadoop cluster for analysis?

- A. Sample the web server logs from the web servers and copy them into HDFS using curl

- B. Ingest the server web logs into HDFS using Flume

- C. Channel these clickstreams into Hadoop using Hadoop Streaming

- D. Import all user clicks from your OLTP databases into Hadoop using Sqoop

- E. Write a MapReduce job with the web servers for mappers and the Hadoop cluster nodes for reducers

Answer: B

Explanation:

Apache Flume is a service for streaming logs into Hadoop.

Apache Flume is a distributed, reliable, and available service for efficiently collecting, aggregating, and moving large amounts of streaming data into the Hadoop Distributed File System (HDFS). It has a simple and flexible architecture based on streaming data flows; and is robust and fault tolerant with tunable reliability mechanisms for failover and recovery.

NEW QUESTION 3

You have a 20-node Hadoop cluster, with 18 slave nodes and 2 master nodes running HDFS High Availability (HA). You want to minimize the chance of data loss in your cluster. What should you do?

- A. Add another master node to increase the number of nodes running the JournalNode which increases the number of machines available to HA to create a quorum

- B. Set an HDFS replication factor that provides data redundancy, protecting against node failure

- C. Run a Secondary NameNode on a different master from the NameNode in order to provide automatic recovery from a NameNode failure.

- D. Run the ResourceManager on a different master from the NameNode in order to load-share HDFS metadata processing

- E. Configure the cluster’s disk drives with an appropriate fault tolerant RAID level

Answer: D

NEW QUESTION 4

You have installed a cluster running HDFS and MapReduce version 2 (MRv2) on YARN. You have no dfs.hosts entry(ies) in your hdfs-site.xml configuration file. You configure a new worker node by setting fs.default.name in its configuration files to point to the NameNode on your cluster, and you start the DataNode daemon on that worker node. What do you have to do on the cluster to allow the worker node to join, and start storing HDFS blocks?

- A. Without creating a dfs.hosts file or making any entries, run the command hadoop dfsadmin -refreshNodes on the NameNode

- B. Restart the NameNode

- C. Create a dfs.hosts file on the NameNode, add the worker node’s name to it, then issue the command hadoop dfsadmin -refreshNodes on the NameNode

- D. Nothing; the worker node will automatically join the cluster when NameNode daemon is started

Answer: A

NEW QUESTION 5

You are working on a project where you need to chain together MapReduce and Pig jobs. You also need the ability to use forks, decision points, and path joins. Which ecosystem project should you use to perform these actions?

- A. Oozie

- B. ZooKeeper

- C. HBase

- D. Sqoop

- E. HUE

Answer: A

NEW QUESTION 6

You decide to create a cluster which runs HDFS in High Availability mode with automatic failover, using Quorum Storage. What is the purpose of ZooKeeper in such a configuration?

- A. It only keeps track of which NameNode is Active at any given time

- B. It monitors an NFS mount point and reports if the mount point disappears

- C. It both keeps track of which NameNode is Active at any given time, and manages the Edits file, which is a log of changes to the HDFS filesystem

- D. It only manages the Edits file, which is a log of changes to the HDFS filesystem

- E. Clients connect to ZooKeeper to determine which NameNode is Active

Answer: A

Explanation:

Reference: http://www.cloudera.com/content/cloudera-content/cloudera-docs/CDH4/latest/PDF/CDH4-High-Availability-Guide.pdf (page 15)

NEW QUESTION 7

Your cluster is configured with HDFS and MapReduce version 2 (MRv2) on YARN. What is the result when you execute: hadoop jar SampleJar MyClass on a client machine?

- A. SampleJar.jar is sent to the ApplicationMaster which allocates a container for SampleJar.jar

- B. SampleJar.jar is placed in a temporary directory in HDFS

- C. SampleJar.jar is sent directly to the ResourceManager

- D. SampleJar.jar is serialized into an XML file which is submitted to the ApplicationMaster

Answer: A

NEW QUESTION 8

Your cluster has the following characteristics:

- A rack-aware topology is configured and on

- Replication is set to 3

- Cluster block size is set to 64MB

Which describes the file read process when a client application connects into the cluster and requests a 50MB file?

- A. The client queries the NameNode for the locations of the block, and reads all three copies. The first copy to complete transfer to the client is the one the client reads as part of Hadoop’s speculative execution framework.

- B. The client queries the NameNode for the locations of the block, and reads from the first location in the list it receives.

- C. The client queries the NameNode for the locations of the block, and reads from a random location in the list it receives to eliminate network I/O loads by balancing which nodes it retrieves data from at any given time.

- D. The client queries the NameNode which retrieves the block from the nearest DataNode to the client then passes that block back to the client.

Answer: B
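A 50MB file fits in a single 64MB block, so the read involves one block with three replicas. The following is a toy sketch, not HDFS code, of the idea that the NameNode returns replica locations ordered nearest-first and the client reads from the first entry. The `Replica` class, the host and rack names, and the simplified distance values are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Replica:
    host: str
    rack: str


def distance(client_host, client_rack, replica):
    """Simplified network distance: 0 same node, 2 same rack, 4 other rack."""
    if replica.host == client_host:
        return 0
    if replica.rack == client_rack:
        return 2
    return 4


def sorted_locations(client_host, client_rack, replicas):
    """Order replicas nearest-first (modelling what the NameNode returns)."""
    return sorted(replicas, key=lambda r: distance(client_host, client_rack, r))


def pick_replica(client_host, client_rack, replicas):
    """The client reads from the first location in the list it receives."""
    return sorted_locations(client_host, client_rack, replicas)[0]


# Hypothetical cluster: three replicas of the single block.
replicas = [
    Replica("dn7", "/rack2"),
    Replica("dn3", "/rack1"),
    Replica("dn9", "/rack2"),
]
chosen = pick_replica("client1", "/rack1", replicas)
print(chosen.host)  # dn3
```

Only one replica is read; the other two are used only if the first read fails.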

NEW QUESTION 9

Identify two features/issues that YARN is designed to address: (Choose two)

- A. Standardize on a single MapReduce API

- B. Single point of failure in the NameNode

- C. Reduce complexity of the MapReduce APIs

- D. Resource pressure on the JobTracker

- E. Ability to run frameworks other than MapReduce, such as MPI

- F. HDFS latency

Answer: DE

Explanation:

Reference: http://www.revelytix.com/?q=content/hadoop-ecosystem (YARN, first para)

NEW QUESTION 10

You are running a Hadoop cluster with a NameNode on host mynamenode. What are two ways to determine available HDFS space in your cluster?

- A. Run hdfs fs -du / and locate the DFS Remaining value

- B. Run hdfs dfsadmin -report and locate the DFS Remaining value

- C. Run hdfs dfs / and subtract NDFS Used from configured Capacity

- D. Connect to http://mynamenode:50070/dfshealth.jsp and locate the DFS remaining value

Answer: BD
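As a rough illustration of locating the DFS Remaining value, the sketch below pulls the first `DFS Remaining` figure out of report text. The sample text is a made-up fragment that only imitates the general shape of `hdfs dfsadmin -report` output; the numbers and the helper name `dfs_remaining_bytes` are assumptions, and real output may differ between versions.

```python
import re


def dfs_remaining_bytes(report_text):
    """Return the first 'DFS Remaining' value (in bytes) found in the report."""
    match = re.search(r"^DFS Remaining:\s*(\d+)", report_text, re.MULTILINE)
    if match is None:
        raise ValueError("no 'DFS Remaining' line found")
    return int(match.group(1))


# Hypothetical report fragment, for illustration only.
sample_report = """Configured Capacity: 8000000000000 (7.28 TB)
Present Capacity: 7600000000000 (6.91 TB)
DFS Remaining: 5000000000000 (4.55 TB)
DFS Used: 2600000000000 (2.36 TB)
"""

print(dfs_remaining_bytes(sample_report))  # 5000000000000
```

In practice the text would come from running the command on a cluster node, for example by capturing its standard output.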

NEW QUESTION 11

Which two are features of Hadoop’s rack topology? (Choose two)

- A. Configuration of rack awareness is accomplished using a configuration file. You cannot use a rack topology script.

- B. Hadoop gives preference to intra-rack data transfer in order to conserve bandwidth

- C. Rack location is considered in the HDFS block placement policy

- D. HDFS is rack aware but MapReduce daemons are not

- E. Even for small clusters on a single rack, configuring rack awareness will improve performance

Answer: BC
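To make the role of rack location in block placement concrete, here is a simplified sketch of the commonly described default policy for three replicas: the first on the writer's node, the second on a node in a different rack, and the third on a different node in that same remote rack. The function name, the topology dictionary, and the node names are hypothetical, and the real policy also weighs load and free space.

```python
import random


def place_replicas(writer_rack, writer_node, topology, seed=0):
    """Choose three (rack, node) targets under a simplified rack-aware policy.

    topology maps rack name -> list of DataNode names (hypothetical layout).
    """
    rng = random.Random(seed)
    # Candidate remote racks need two nodes to hold replicas two and three.
    remote_racks = sorted(
        rack for rack, nodes in topology.items()
        if rack != writer_rack and len(nodes) >= 2
    )
    if not remote_racks:
        raise ValueError("need a second rack with at least two nodes")
    remote_rack = rng.choice(remote_racks)
    second, third = rng.sample(topology[remote_rack], 2)
    return [
        (writer_rack, writer_node),  # replica 1: local node
        (remote_rack, second),       # replica 2: different rack
        (remote_rack, third),        # replica 3: same remote rack, other node
    ]


topology = {
    "/rack1": ["dn1", "dn2"],
    "/rack2": ["dn3", "dn4"],
    "/rack3": ["dn5", "dn6"],
}
placement = place_replicas("/rack1", "dn1", topology)
print(placement)
```

The result spans exactly two racks, which protects against the loss of a whole rack while keeping one of the two inter-node transfers inside a rack.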

NEW QUESTION 12

You have recently converted your Hadoop cluster from a MapReduce 1 (MRv1) architecture to MapReduce 2 (MRv2) on YARN architecture. Your developers are accustomed to specifying the number of map and reduce tasks (resource allocation) when they run jobs. A developer wants to know how to specify the number of reduce tasks when a specific job runs. Which method should you tell that developer to implement?

- A. MapReduce version 2 (MRv2) on YARN abstracts resource allocation away from the idea of “tasks” into memory and virtual cores, thus eliminating the need for a developer to specify the number of reduce tasks, and indeed preventing the developer from specifying the number of reduce tasks.

- B. In YARN, resource allocation is a function of megabytes of memory in multiples of 1024mb. Thus, they should specify the amount of memory resource they need by executing -D mapreduce-reduces.memory-mb-2048

- C. In YARN, the ApplicationMaster is responsible for requesting the resource required for a specific launch. Thus, executing -D yarn.applicationmaster.reduce.tasks=2 will specify that the ApplicationMaster launch two task containers on the worker nodes.

- D. Developers specify reduce tasks in the exact same way for both MapReduce version 1 (MRv1) and MapReduce version 2 (MRv2) on YARN. Thus, executing -D mapreduce.job.reduces=2 will specify reduce tasks.

- E. In YARN, resource allocation is a function of virtual cores specified by the ApplicationManager making requests to the NodeManager where a reduce task is handled by a single container (and thus a single virtual core). Thus, the developer needs to specify the number of virtual cores to the NodeManager by executing -p yarn.nodemanager.cpu-vcores=2

Answer: D
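A small sketch of how the `mapreduce.job.reduces` property might be passed on the command line. The jar name, driver class, and paths are placeholders, and the sketch assumes the driver parses generic options (for example through `ToolRunner`), since `-D` is otherwise not interpreted. The helper only builds the argument list; it does not run anything.

```python
import shlex


def build_job_command(jar, main_class, num_reducers, job_args):
    """Build a 'hadoop jar' command that sets the number of reduce tasks."""
    return [
        "hadoop", "jar", jar, main_class,
        # Generic options go after the main class and before job arguments.
        "-D", f"mapreduce.job.reduces={num_reducers}",
        *job_args,
    ]


# Placeholder jar, class, and paths, for illustration only.
cmd = build_job_command("myjob.jar", "MyDriver", 2, ["input", "output"])
print(shlex.join(cmd))
# hadoop jar myjob.jar MyDriver -D mapreduce.job.reduces=2 input output
```

The resulting list could be handed to `subprocess.run` on a machine with a configured Hadoop client.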

NEW QUESTION 13

Table schemas in Hive are:

- A. Stored as metadata on the NameNode

- B. Stored along with the data in HDFS

- C. Stored in the Metadata

- D. Stored in ZooKeeper

Answer: B

NEW QUESTION 14

You use the hadoop fs -put command to add a file “sales.txt” to HDFS. This file is small enough that it fits into a single block, which is replicated to three nodes in your cluster (with a replication factor of 3). One of the nodes holding this file (a single block) fails. How will the cluster handle the replication of the file in this situation?

- A. The file will remain under-replicated until the administrator brings that node back online

- B. The cluster will re-replicate the file the next time the system administrator reboots the NameNode daemon (as long as the file’s replication factor doesn’t fall below)

- C. This will be immediately re-replicated and all other HDFS operations on the cluster will halt until the cluster’s replication values are restored

- D. The file will be re-replicated automatically after the NameNode determines it is under-replicated based on the block reports it receives from the DataNodes

Answer: D
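The detection step can be pictured with a toy model: the NameNode counts, per block, how many live DataNodes report holding it, and flags any block whose count is below the target replication factor. This is an illustrative sketch, not NameNode code; the function name, block ID, and node names are assumptions.

```python
from collections import defaultdict


def under_replicated(block_reports, target=3):
    """Map each under-replicated block to its number of missing replicas.

    block_reports maps a live DataNode name -> set of block IDs it reported.
    """
    counts = defaultdict(int)
    for blocks in block_reports.values():
        for block_id in blocks:
            counts[block_id] += 1
    return {b: target - n for b, n in counts.items() if n < target}


# Hypothetical single-block file stored on three DataNodes.
reports = {"dn1": {"blk_1"}, "dn2": {"blk_1"}, "dn3": {"blk_1"}}
print(under_replicated(reports))  # {}

del reports["dn3"]  # the node fails and stops reporting
print(under_replicated(reports))  # {'blk_1': 1}
```

Once a block is flagged, the NameNode schedules a copy from a surviving replica to another DataNode; no administrator action or NameNode restart is involved.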

NEW QUESTION 15

A slave node in your cluster has four 2 TB hard drives installed (4 x 2TB). The DataNode is configured to store HDFS blocks on all disks. You set the value of the dfs.datanode.du.reserved parameter to 100 GB. How does this alter HDFS block storage?

- A. 25GB on each hard drive may not be used to store HDFS blocks

- B. 100GB on each hard drive may not be used to store HDFS blocks

- C. All hard drives may be used to store HDFS blocks as long as at least 100 GB in total is available on the node

- D. A maximum of 100 GB on each hard drive may be used to store HDFS blocks

Answer: B
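Because `dfs.datanode.du.reserved` applies per volume, the arithmetic for this node can be worked through as below. The sketch assumes decimal units (1 TB = 1000 GB) and ignores any other non-HDFS usage on the disks; the function name is illustrative.

```python
def hdfs_usable_gb(volume_sizes_gb, reserved_per_volume_gb):
    """Space available to HDFS blocks when the reservation applies per volume."""
    return sum(
        max(size - reserved_per_volume_gb, 0) for size in volume_sizes_gb
    )


volumes_gb = [2000] * 4  # four 2 TB drives, assuming 1 TB = 1000 GB
reserved_gb = 100

print(reserved_gb * len(volumes_gb))            # 400 GB reserved in total
print(hdfs_usable_gb(volumes_gb, reserved_gb))  # 7600 GB left for HDFS blocks
```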

NEW QUESTION 16

Assume you have a file named foo.txt in your local directory. You issue the following three commands:

hadoop fs -mkdir input

hadoop fs -put foo.txt input/foo.txt

hadoop fs -put foo.txt input

What happens when you issue the third command?

- A. The write succeeds, overwriting foo.txt in HDFS with no warning

- B. The file is uploaded and stored as a plain file named input

- C. You get a warning that foo.txt is being overwritten

- D. You get an error message telling you that foo.txt already exists, and asking you if you would like to overwrite it.

- E. You get an error message telling you that foo.txt already exists. The file is not written to HDFS

- F. You get an error message telling you that input is not a directory

- G. The write silently fails

Answer: E
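The three commands can be traced with a toy in-memory namespace. This is only a model of the destination-resolution rule (a directory destination means "put the file inside it under its own name") and of the refusal to overwrite; the `ToyHdfs` class and its error text are assumptions, not Hadoop's implementation.

```python
import posixpath


class ToyHdfs:
    """Minimal in-memory stand-in for an HDFS home directory."""

    def __init__(self):
        self.dirs = set()
        self.files = {}

    def mkdir(self, path):
        self.dirs.add(path)

    def put(self, local_name, dest, data=b""):
        # A directory destination resolves to <dest>/<basename of source>.
        if dest in self.dirs:
            target = posixpath.join(dest, posixpath.basename(local_name))
        else:
            target = dest
        if target in self.files:
            raise FileExistsError(f"put: `{target}': File exists")
        self.files[target] = data
        return target


fs = ToyHdfs()
fs.mkdir("input")
print(fs.put("foo.txt", "input/foo.txt"))  # input/foo.txt
try:
    fs.put("foo.txt", "input")  # resolves to input/foo.txt again
except FileExistsError as err:
    print(err)  # put: `input/foo.txt': File exists
```

The third command targets the same path as the second, so it fails with an error and nothing is written.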

NEW QUESTION 17

You have just run a MapReduce job to filter user messages to only those of a selected geographical region. The output for this job is in a directory named westUsers, located just below your home directory in HDFS. Which command gathers these into a single file on your local file system?

- A. hadoop fs -getmerge -R westUsers.txt

- B. hadoop fs -getmerge westUsers westUsers.txt

- C. hadoop fs -cp westUsers/* westUsers.txt

- D. hadoop fs -get westUsers westUsers.txt

Answer: B

NEW QUESTION 18

You suspect that your NameNode is incorrectly configured, and is swapping memory to disk. Which Linux commands help you to identify whether swapping is occurring? (Select all that apply)

- A. free

- B. df

- C. memcat

- D. top

- E. jps

- F. vmstat

- G. swapinfo

Answer: ADF

Explanation:

Reference: http://www.cyberciti.biz/faq/linux-check-swap-usage-command/
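The same swap figures that tools such as `free` display are exposed on Linux in `/proc/meminfo`. The sketch below computes swap in use from text in that format; the sample values are invented for illustration, and the helper name is an assumption. Note that swap in use does not by itself prove active swapping; ongoing swap-in and swap-out activity is what `vmstat` reports in its `si` and `so` columns.

```python
def swap_used_kb(meminfo_text):
    """Return SwapTotal - SwapFree (in kB) from /proc/meminfo-style text."""
    fields = {}
    for line in meminfo_text.splitlines():
        key, _, rest = line.partition(":")
        parts = rest.split()
        if parts:
            fields[key.strip()] = int(parts[0])
    return fields["SwapTotal"] - fields["SwapFree"]


# Invented sample in the /proc/meminfo layout.
sample = """MemTotal:       16384000 kB
MemFree:          512000 kB
SwapTotal:       2097148 kB
SwapFree:        1048574 kB
"""

print(swap_used_kb(sample))  # 1048574
```

On a real host the text could be read with `open("/proc/meminfo").read()`.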

NEW QUESTION 19

You have a cluster running with a FIFO scheduler enabled. You submit a large job A to the cluster, which you expect to run for one hour. Then, you submit job B to the cluster, which you expect to run a couple of minutes only.

You submit both jobs with the same priority.

Which two best describe how the FIFO Scheduler arbitrates the cluster resources for a job and its tasks? (Choose two)

- A. Because there is more than a single job on the cluster, the FIFO Scheduler will enforce a limit on the percentage of resources allocated to a particular job at any given time

- B. Tasks are scheduled in the order of their job submission

- C. The order of execution of jobs may vary

- D. Given jobs A and B submitted in that order, all tasks from job A are guaranteed to finish before all tasks from job B

- E. The FIFO Scheduler will give, on average, an equal share of the cluster resources over the job lifecycle

- F. The FIFO Scheduler will pass an exception back to the client when Job B is submitted, since all slots on the cluster are used

Answer: AD
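First-in, first-out ordering can be pictured with a toy queue model: tasks are dispatched strictly in job submission order, so job B's tasks only receive slots once every task of job A has been handed one. The function, the slot count, and the one-step task duration are simplifying assumptions, not scheduler internals.

```python
from collections import deque


def fifo_schedule(jobs, slots):
    """Dispatch tasks in submission order; each task occupies a slot for one round.

    jobs is a list of (job_name, task_count) in submission order.
    Returns a list of rounds, each a list of task labels.
    """
    queue = deque(f"{name}-{i}" for name, count in jobs for i in range(count))
    rounds = []
    while queue:
        batch_size = min(slots, len(queue))
        rounds.append([queue.popleft() for _ in range(batch_size)])
    return rounds


# Hypothetical workload: job A has 5 tasks, job B has 2, on 3 slots.
for round_tasks in fifo_schedule([("A", 5), ("B", 2)], slots=3):
    print(round_tasks)
# ['A-0', 'A-1', 'A-2']
# ['A-3', 'A-4', 'B-0']
# ['B-1']
```

In this model a short job submitted behind a long one waits for the long job's tasks to be dispatched first, which is the usual drawback cited for FIFO scheduling.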

NEW QUESTION 20

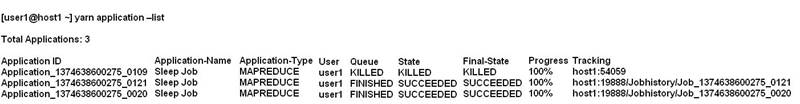

Given:

You want to clean up this list by removing jobs where the State is KILLED. What command you enter?

- A. Yarn application –refreshJobHistory

- B. Yarn application –kill application_1374638600275_0109

- C. Yarn rmadmin –refreshQueue

- D. Yarn rmadmin –kill application_1374638600275_0109

Answer: B

Explanation:

Reference: http://docs.hortonworks.com/HDPDocuments/HDP2/HDP-2.1-latest/bk_using-apache-hadoop/content/common_mrv2_commands.html

NEW QUESTION 21

You are running a Hadoop cluster with a NameNode on host mynamenode, a secondary NameNode on host mysecondarynamenode and several DataNodes.

Which best describes how you determine when the last checkpoint happened?

- A. Execute hdfs namenode -report on the command line and look at the Last Checkpoint information

- B. Execute hdfs dfsadmin -saveNamespace on the command line which returns to you the last checkpoint value in fstime file

- C. Connect to the web UI of the Secondary NameNode (http://mysecondary:50090/) and look at the “Last Checkpoint” information

- D. Connect to the web UI of the NameNode (http://mynamenode:50070) and look at the “Last Checkpoint” information

Answer: C

Explanation:

Reference: https://www.inkling.com/read/hadoop-definitive-guide-tom-white-3rd/chapter-10/hdfs

NEW QUESTION 22

On a cluster running CDH 5.0 or above, you use the hadoop fs -put command to write a 300MB file into a previously empty directory using an HDFS block size of 64 MB. Just after this command has finished writing 200 MB of this file, what would another user see when they look in the directory?

- A. The directory will appear to be empty until the entire file write is completed on the cluster

- B. They will see the file with a ._COPYING_ extension on its name. If they view the file, they will see contents of the file up to the last completed block (as each 64MB block is written, that block becomes available)

- C. They will see the file with a ._COPYING_ extension on its name. If they attempt to view the file, they will get a ConcurrentFileAccessException until the entire file write is completed on the cluster

- D. They will see the file with its original name. If they attempt to view the file, they will get a ConcurrentFileAccessException until the entire file write is completed on the cluster

Answer: B
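The block arithmetic behind this scenario can be checked quickly. The sketch below computes how many blocks the 300MB file occupies at a 64MB block size, and how much data sits in fully completed blocks once 200MB has been written. The helper names are illustrative.

```python
import math


def block_count(file_mb, block_mb):
    """Total blocks needed for a file, counting a final partial block."""
    return math.ceil(file_mb / block_mb)


def completed_blocks(written_mb, block_mb):
    """Number of blocks that have been fully written so far."""
    return written_mb // block_mb


BLOCK_MB = 64
print(block_count(300, BLOCK_MB))                  # 5 (four full blocks + 44 MB)
print(completed_blocks(200, BLOCK_MB))             # 3
print(completed_blocks(200, BLOCK_MB) * BLOCK_MB)  # 192 MB in completed blocks
```

So at the 200MB mark, three blocks (192MB) are complete and the fourth is still being written.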

NEW QUESTION 23

Which is the default scheduler in YARN?

- A. YARN doesn’t configure a default scheduler, you must first assign an appropriate scheduler class in yarn-site.xml

- B. Capacity Scheduler

- C. Fair Scheduler

- D. FIFO Scheduler

Answer: B

Explanation:

Reference: http://hadoop.apache.org/docs/r2.4.1/hadoop-yarn/hadoop-yarn-site/CapacityScheduler.html

NEW QUESTION 24

Which process instantiates user code, and executes map and reduce tasks on a cluster running MapReduce v2 (MRv2) on YARN?

- A. NodeManager

- B. ApplicationMaster

- C. TaskTracker

- D. JobTracker

- E. NameNode

- F. DataNode

- G. ResourceManager

Answer: A
