Our pass rate is as high as 98.9%, and the similarity between our SAP-C01 study guide and the real exam is 90%, based on our seven years of teaching experience. Do you want to pass the Amazon Web Services SAP-C01 exam on the first try? Try the latest Amazon Web Services SAP-C01 practice questions and answers first.

Free SAP-C01 sample questions follow:

NEW QUESTION 1

A Solutions Architect has created an AWS CloudFormation template for a three-tier application that contains an Auto Scaling group of Amazon EC2 instances running a custom AMI.

The Solutions Architect wants to ensure that future updates to the custom AMI can be deployed to a running stack by first updating the template to refer to the new AMI, and then invoking UpdateStack to replace the EC2 instances with instances launched from the new AMI.

How can updates to the AMI be deployed to meet these requirements?

- A. Create a change set for a new version of the template, view the changes to the running EC2 instances to ensure that the AMI is correctly updated, and then execute the change set.

- B. Edit the AWS::AutoScaling::LaunchConfiguration resource in the template, changing its DeletionPolicy to Replace.

- C. Edit the AWS::AutoScaling::AutoScalingGroup resource in the template, inserting an UpdatePolicy attribute.

- D. Create a new stack from the updated template. Once it is successfully deployed, modify the DNS records to point to the new stack and delete the old stack.

Answer: C

Explanation:

References:

https://docs.aws.amazon.com/AWSCloudFormation/latest/UserGuide/aws-properties-as-launchconfig.html
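As a rough illustration of the UpdatePolicy attribute named in the answer, the sketch below builds a CloudFormation resource fragment as a Python dictionary. The logical name `WebLaunchConfig`, the group sizes, and the rolling-update values are assumptions chosen for the example; they do not come from the question.

```python
import json


def asg_with_update_policy(launch_config_ref: str) -> dict:
    """Build an AWS::AutoScaling::AutoScalingGroup fragment with an UpdatePolicy.

    The sizes and rolling-update settings are illustrative assumptions.
    """
    return {
        "Type": "AWS::AutoScaling::AutoScalingGroup",
        "Properties": {
            "LaunchConfigurationName": {"Ref": launch_config_ref},
            "MinSize": "2",
            "MaxSize": "4",
            "AvailabilityZones": {"Fn::GetAZs": ""},
        },
        # Without an UpdatePolicy, a changed launch configuration only
        # affects instances launched later; running instances are kept.
        "UpdatePolicy": {
            "AutoScalingRollingUpdate": {
                "MinInstancesInService": "1",
                "MaxBatchSize": "1",
                "PauseTime": "PT5M",
            }
        },
    }


fragment = asg_with_update_policy("WebLaunchConfig")  # hypothetical logical ID
print(json.dumps(fragment, indent=2))
```

With a fragment like this in the template, changing the AMI in the launch configuration and calling UpdateStack should replace the running instances in batches.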

NEW QUESTION 2

A company has developed a new billing application that will be released in two weeks. Developers are testing the application running on 10 EC2 instances managed by an Auto Scaling group in subnet 172.31.0.0/24 within VPC A with CIDR block 172.31.0.0/16. The Developers noticed connection timeout errors in the application logs while connecting to an Oracle database running on an Amazon EC2 instance in the same region within VPC B with CIDR block 172.50.0.0/16. The IP of the database instance is hard-coded in the application instances.

Which recommendations should a Solutions Architect present to the Developers to solve the problem in a secure way with minimal maintenance and overhead?

- A. Disable the SrcDestCheck attribute for all instances running the application and Oracle Database. Change the default route of VPC A to point to the ENI of the Oracle Database that has an IP address assigned within the range of 172.50.0.0/26.

- B. Create and attach internet gateways for both VPCs. Configure default routes to the internet gateways for both VPCs. Assign an Elastic IP for each Amazon EC2 instance in VPC A.

- C. Create a VPC peering connection between the two VPCs and add a route to the routing table of VPC A that points to the IP address range of 172.50.0.0/16.

- D. Create an additional Amazon EC2 instance for each VPC as a customer gateway; create one virtual private gateway (VGW) for each VPC, configure an end-to-end VPN, and advertise the routes for 172.50.0.0/16.

Answer: C
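The address reasoning behind the peering option can be checked with Python's standard `ipaddress` module. This is only a sketch: the database address `172.50.0.10` is a made-up example, since the question does not give the actual IP.

```python
import ipaddress

vpc_a = ipaddress.ip_network("172.31.0.0/16")
vpc_b = ipaddress.ip_network("172.50.0.0/16")
app_subnet = ipaddress.ip_network("172.31.0.0/24")
db_ip = ipaddress.ip_address("172.50.0.10")  # hypothetical database address

# VPC peering requires non-overlapping CIDR blocks.
peering_possible = not vpc_a.overlaps(vpc_b)

# The local route of VPC A does not cover the database, so traffic to it
# has no path until a route for VPC B's range is added.
covered_by_local_route = db_ip in vpc_a
covered_by_peering_route = db_ip in vpc_b

print(peering_possible, covered_by_local_route, covered_by_peering_route)
```

Because the hard-coded database address falls outside VPC A's range, a route for 172.50.0.0/16 over the peering connection is what lets the application instances reach it.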

NEW QUESTION 3

A company wants to migrate its website from an on-premises data center onto AWS. At the same time, it wants to migrate the website to a containerized microservice-based architecture to improve the availability and cost efficiency. The company’s security policy states that privileges and network permissions must be configured according to best practice, using least privilege.

A Solutions Architect must create a containerized architecture that meets the security requirements and has deployed the application to an Amazon ECS cluster.

What steps are required after the deployment to meet the requirements? (Choose two.)

- A. Create tasks using the bridge network mode.

- B. Create tasks using the awsvpc network mode.

- C. Apply security groups to Amazon EC2 instances, and use IAM roles for EC2 instances to access other resources.

- D. Apply security groups to the tasks, and pass IAM credentials into the container at launch time to access other resources.

- E. Apply security groups to the tasks, and use IAM roles for tasks to access other resources.

Answer: BE

Explanation:

https://aws.amazon.com/about-aws/whats-new/2021/11/amazon-ecs-introduces-awsvpc-networking-mode-for-c

https://amazonaws-china.com/blogs/compute/introducing-cloud-native-networking-for-ecs-containers/

https://docs.aws.amazon.com/AmazonECS/latest/developerguide/task-iam-roles.html
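To make the two chosen options concrete, here is a minimal sketch of a task definition expressed as a Python dictionary, plus a small check for the two settings. The family name, role ARN, account ID, and image are placeholders invented for the example.

```python
# Hypothetical task definition; every name and ARN below is a placeholder.
task_definition = {
    "family": "web",
    "networkMode": "awsvpc",  # each task gets its own network interface
    "taskRoleArn": "arn:aws:iam::123456789012:role/web-task-role",
    "containerDefinitions": [
        {"name": "web", "image": "example/web:latest", "memory": 256}
    ],
}


def follows_task_level_isolation(task_def: dict) -> bool:
    """Return True when the task uses awsvpc networking and a task role."""
    return task_def.get("networkMode") == "awsvpc" and bool(task_def.get("taskRoleArn"))


print(follows_task_level_isolation(task_definition))
```

With awsvpc mode, security groups attach to the task's own network interface, and the task role scopes permissions to the task rather than to the whole EC2 instance.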

NEW QUESTION 4

A company that provides wireless services needs a solution to store and analyze log files about user activities. Currently, log files are delivered daily to an Amazon EC2 instance running Amazon Linux. A batch script is run once a day to aggregate data used for analysis by a third-party tool. The data pushed to the third-party tool is used to generate a visualization for end users. The batch script is cumbersome to maintain, and it takes several hours to deliver the ever-increasing data volumes to the third-party tool. The company wants to lower costs, and is open to considering a new tool that minimizes development effort and lowers administrative overhead. The company wants to build a more agile solution that can store and perform the analysis in near-real time, with minimal overhead. The solution needs to be cost effective and scalable to meet the company’s end-user base growth.

Which solution meets the company’s requirements?

- A. Develop a Python script to capture the data from Amazon EC2 in real time and store the data in Amazon S3. Use a copy command to copy data from Amazon S3 to Amazon Redshift. Connect a business intelligence tool running on Amazon EC2 to Amazon Redshift and create the visualizations.

- B. Use an Amazon Kinesis agent running on an EC2 instance in an Auto Scaling group to collect and send the data to an Amazon Kinesis Data Firehose delivery stream. The Kinesis Data Firehose delivery stream will deliver the data directly to Amazon ES. Use Kibana to visualize the data.

- C. Use an in-memory caching application running on an Amazon EBS-optimized EC2 instance to capture the log data in near real-time. Install an Amazon ES cluster on the same EC2 instance to store the log files as they are delivered to Amazon EC2 in near real-time. Install a Kibana plugin to create the visualizations.

- D. Use an Amazon Kinesis agent running on an EC2 instance to collect and send the data to an Amazon Kinesis Data Firehose delivery stream. The Kinesis Data Firehose delivery stream will deliver the data to Amazon S3. Use an AWS Lambda function to deliver the data from Amazon S3 to Amazon ES. Use Kibana to visualize the data.

Answer: B

Explanation:

https://docs.aws.amazon.com/firehose/latest/dev/writing-with-agents.html

NEW QUESTION 5

A large company experienced a drastic increase in its monthly AWS spend. This is after Developers accidentally launched Amazon EC2 instances in unexpected regions. The company has established practices around least privileges for Developers and controls access to on-premises resources using Active Directory groups. The company now wants to control costs by restricting the level of access that Developers have to the AWS Management Console without impacting their productivity. The company would also like to allow Developers to launch Amazon EC2 in only one region, without limiting access to other services in any region.

How can this company achieve these new security requirements while minimizing the administrative burden on the Operations team?

- A. Set up SAML-based authentication tied to an IAM role that has an AdministrativeAccess managed policy attached to it. Attach a customer managed policy that denies access to Amazon EC2 in each region except for the one required.

- B. Create an IAM user for each Developer and add them to the developer IAM group that has the PowerUserAccess managed policy attached to it. Attach a customer managed policy that allows the Developers access to Amazon EC2 only in the required region.

- C. Set up SAML-based authentication tied to an IAM role that has a PowerUserAccess managed policy and a customer managed policy that deny all the Developers access to any AWS services except AWS Service Catalog. Within AWS Service Catalog, create a product containing only the EC2 resources in the approved region.

- D. Set up SAML-based authentication tied to an IAM role that has the PowerUserAccess managed policy attached to it. Attach a customer managed policy that denies access to Amazon EC2 in each region except for the one required.

Answer: D

Explanation:

The key points here are SAML for Active Directory federation and authentication, and PowerUserAccess versus AdministrativeAccess. PowerUserAccess carries fewer privileges, which is what the Developers require; the administrator policy grants more rights. The description of PowerUserAccess given by AWS is “Provides full access to AWS services and resources, but does not allow management of Users and groups.”
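One way the customer managed deny policy could look is sketched below as a Python dictionary serialized to JSON. It assumes the `aws:RequestedRegion` global condition key is used to express the region restriction, and `us-east-1` is only an example; the question does not name the approved region.

```python
import json


def deny_ec2_outside_region(approved_region: str) -> dict:
    """Build a policy that denies all EC2 actions outside one region."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyEc2OutsideApprovedRegion",
                "Effect": "Deny",
                "Action": "ec2:*",
                "Resource": "*",
                "Condition": {
                    "StringNotEquals": {"aws:RequestedRegion": approved_region}
                },
            }
        ],
    }


policy = deny_ec2_outside_region("us-east-1")  # example region, an assumption
print(json.dumps(policy, indent=2))
```

Because the statement only denies EC2 actions, the permissions that PowerUserAccess grants for other services in any region are left untouched.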

NEW QUESTION 6

A Solutions Architect must establish a patching plan for a large mixed fleet of Windows and Linux servers. The patching plan must be implemented securely, be audit ready, and comply with the company’s business requirements.

Which option will meet these requirements with MINIMAL effort?

- A. Install and use an OS-native patching service to manage the update frequency and release approval for all instances. Use AWS Config to verify the OS state on each instance and report on any patch compliance issues.

- B. Use AWS Systems Manager on all instances to manage patching. Test patches outside of production and then deploy during a maintenance window with the appropriate approval.

- C. Use AWS OpsWorks for Chef Automate to run a set of scripts that will iterate through all instances of a given type. Issue the appropriate OS command to get and install updates on each instance, including any required restarts during the maintenance window.

- D. Migrate all applications to AWS OpsWorks and use OpsWorks automatic patching support to keep the OS up-to-date following the initial installation. Use AWS Config to provide audit and compliance reporting.

Answer: B

Explanation:

Only Systems Manager can effectively patch both operating systems, on AWS and on premises.

NEW QUESTION 7

A company runs a dynamic mission-critical web application that has an SLA of 99.99%. Global application users access the application 24/7. The application is currently hosted on premises and routinely fails to meet its SLA, especially when millions of users access the application concurrently. Remote users complain of latency.

How should this application be redesigned to be scalable and allow for automatic failover at the lowest cost?

- A. Use Amazon Route 53 failover routing with geolocation-based routing. Host the website on automatically scaled Amazon EC2 instances behind an Application Load Balancer with an additional Application Load Balancer and EC2 instances for the application layer in each region. Use a Multi-AZ deployment with MySQL as the data layer.

- B. Use Amazon Route 53 round robin routing to distribute the load evenly to several regions with health checks. Host the website on automatically scaled Amazon ECS with AWS Fargate technology containers behind a Network Load Balancer, with an additional Network Load Balancer and Fargate containers for the application layer in each region. Use Amazon Aurora replicas for the data layer.

- C. Use Amazon Route 53 latency-based routing to route to the nearest region with health checks. Host the website in Amazon S3 in each region and use Amazon API Gateway with AWS Lambda for the application layer. Use Amazon DynamoDB global tables as the data layer with Amazon DynamoDB Accelerator (DAX) for caching.

- D. Use Amazon Route 53 geolocation-based routing. Host the website on automatically scaled AWS Fargate containers behind a Network Load Balancer with an additional Network Load Balancer and Fargate containers for the application layer in each region. Use Amazon Aurora Multi-Master for Aurora MySQL as the data layer.

Answer: C

Explanation:

https://aws.amazon.com/getting-started/projects/build-serverless-web-app-lambda-apigateway-s3-dynamodb-co

NEW QUESTION 8

A company has deployed an application to multiple environments in AWS, including production and testing. The company has separate accounts for production and testing, and users are allowed to create additional application users for team members or services, as needed. The Security team has asked the Operations team for better isolation between production and testing with centralized controls on security credentials and improved management of permissions between environments.

Which of the following options would MOST securely accomplish this goal?

- A. Create a new AWS account to hold user and service accounts, such as an identity account. Create users and groups in the identity account. Create roles with appropriate permissions in the production and testing accounts. Add the identity account to the trust policies for the roles.

- B. Modify permissions in the production and testing accounts to limit creating new IAM users to members of the Operations team. Set a strong IAM password policy on each account. Create new IAM users and groups in each account to limit developer access to just the services required to complete their job function.

- C. Create a script that runs on each account that checks user accounts for adherence to a security policy. Disable any user or service accounts that do not comply.

- D. Create all user accounts in the production account. Create roles for access in the production account and testing accounts. Grant cross-account access from the production account to the testing account.

Answer: A

Explanation:

https://aws.amazon.com/blogs/security/how-to-centralize-and-automate-iam-policy-creation-in-sandbox-develop

NEW QUESTION 9

A company wants to move a web application to AWS. The application stores session information locally on each web server, which will make auto scaling difficult. As part of the migration, the application will be rewritten to decouple the session data from the web servers. The company requires low latency, scalability, and availability.

Which service will meet the requirements for storing the session information in the MOST cost-effective way?

- A. Amazon ElastiCache with the Memcached engine

- B. Amazon S3

- C. Amazon RDS MySQL

- D. Amazon ElastiCache with the Redis engine

Answer: D

Explanation:

https://aws.amazon.com/caching/session-management/ https://aws.amazon.com/elasticache/redis-vs-memcached/

NEW QUESTION 10

A company has been using a third-party provider for its content delivery network and recently decided to switch to Amazon CloudFront. The Development team wants to maximize performance for the global user base. The company uses a content management system (CMS) that serves both static and dynamic content. The CMS is behind an Application Load Balancer (ALB), which is set as the default origin for the distribution. Static assets are served from an Amazon S3 bucket. The Origin Access Identity (OAI) was created properly and the S3 bucket policy has been updated to allow the GetObject action from the OAI, but static assets are receiving a 404 error.

Which combination of steps should the Solutions Architect take to fix the error? (Select TWO.)

- A. Add another origin to the CloudFront distribution for the static assets

- B. Add a path based rule to the ALB to forward requests for the static assets

- C. Add an RTMP distribution to allow caching of both static and dynamic content

- D. Add a behavior to the CloudFront distribution for the path pattern and the origin of the static assets

- E. Add a host header condition to the ALB listener and forward the header from CloudFront to add traffic to the allow list

Answer: AD
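The 404 can be pictured with a simplified model of how a distribution picks an origin: path patterns are tried in order, and anything unmatched falls through to the default origin. The sketch below uses `fnmatchcase` as a stand-in for CloudFront's pattern matching, which is an approximation, and the origin names and the `/static/*` pattern are invented for the example.

```python
from fnmatch import fnmatchcase


def pick_origin(path: str, behaviors: list, default_origin: str) -> str:
    """Return the origin of the first behavior whose pattern matches the path."""
    for pattern, origin in behaviors:
        if fnmatchcase(path, pattern):
            return origin
    return default_origin


# Before the fix: only the default behavior exists, so static requests go to the ALB.
before = pick_origin("/static/logo.png", [], "alb-cms")

# After the fix: an S3 origin plus a behavior for the static path pattern.
behaviors = [("/static/*", "s3-static-assets")]  # hypothetical pattern and origin
after = pick_origin("/static/logo.png", behaviors, "alb-cms")
dynamic = pick_origin("/index.php", behaviors, "alb-cms")

print(before, after, dynamic)
```

In this model both steps are needed: the S3 bucket has to exist as an origin, and a behavior has to route the static path pattern to it.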

NEW QUESTION 11

A bank is designing an online customer service portal where customers can chat with customer service agents. The portal is required to maintain a 15-minute RPO or RTO in case of a regional disaster. Banking regulations require that all customer service chat transcripts must be preserved on durable storage for at least 7 years, chat conversations must be encrypted in-flight, and transcripts must be encrypted at rest. The Data Loss Prevention team requires that data at rest must be encrypted using a key that the team controls, rotates, and revokes.

Which design meets these requirements?

- A. The chat application logs each chat message into Amazon CloudWatch Logs. A scheduled AWS Lambda function invokes a CloudWatch Logs CreateExportTask every 5 minutes to export chat transcripts to Amazon S3. The S3 bucket is configured for cross-region replication to the backup region. Separate AWS KMS keys are specified for the CloudWatch Logs group and the S3 bucket.

- B. The chat application logs each chat message into two different Amazon CloudWatch Logs groups in two different regions, with the same AWS KMS key applied. Both CloudWatch Logs groups are configured to export logs into an Amazon Glacier vault with a 7-year vault lock policy with a KMS key specified.

- C. The chat application logs each chat message into Amazon CloudWatch Logs. A subscription filter on the CloudWatch Logs group feeds into an Amazon Kinesis Data Firehose which streams the chat messages into an Amazon S3 bucket in the backup region. Separate AWS KMS keys are specified for the CloudWatch Logs group and the Kinesis Data Firehose.

- D. The chat application logs each chat message into Amazon CloudWatch Logs. The CloudWatch Logs group is configured to export logs into an Amazon Glacier vault with a 7-year vault lock policy. Glacier cross-region replication mirrors chat archives to the backup region. Separate AWS KMS keys are specified for the CloudWatch Logs group and the Amazon Glacier vault.

Answer: B

NEW QUESTION 12

A Solutions Architect has been asked to look at a company’s Amazon Redshift cluster, which has quickly become an integral part of its technology and supports key business processes. The Solutions Architect is to increase the reliability and availability of the cluster and provide options to ensure that if an issue arises, the cluster can either operate or be restored within four hours.

Which of the following solution options BEST addresses the business need in the most cost-effective manner?

- A. Ensure that the Amazon Redshift cluster has been set up to make use of Auto Scaling groups with the nodes in the cluster spread across multiple Availability Zones.

- B. Ensure that the Amazon Redshift cluster creation has been templated using AWS CloudFormation so it can easily be launched in another Availability Zone and data populated from the automated Redshift backups stored in Amazon S3.

- C. Use Amazon Kinesis Data Firehose to collect the data ahead of ingestion into Amazon Redshift and create clusters using AWS CloudFormation in another region and stream the data to both clusters.

- D. Create two identical Amazon Redshift clusters in different regions (one as the primary, one as the secondary). Use Amazon S3 cross-region replication from the primary to secondary region, which triggers an AWS Lambda function to populate the cluster in the secondary region.

Answer: B

Explanation:

https://aws.amazon.com/redshift/faqs/?nc1=h_ls Q: What happens to my data warehouse cluster availability and data durability if my data warehouse cluster's Availability Zone (AZ) has an outage? If your Amazon Redshift data warehouse cluster's Availability Zone becomes unavailable, you will not be able to use your cluster until power and network access to the AZ are restored. Your data warehouse cluster's data is preserved so you can start using your Amazon Redshift data warehouse as soon as the AZ becomes available again. In addition, you can also choose to restore any existing snapshots to a new AZ in the same Region. Amazon Redshift will restore your most frequently accessed data first so you can resume queries as quickly as possible.

NEW QUESTION 13

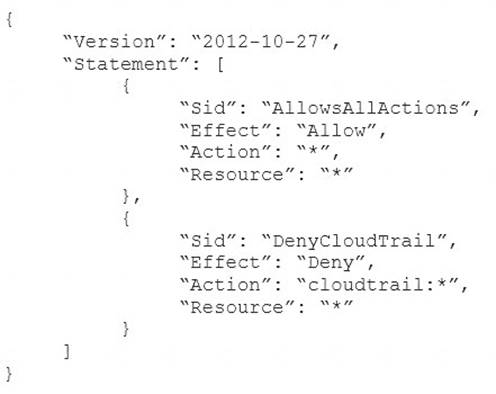

A company will several AWS accounts is using AWS Organizations and service control policies (SCPs). An Administrator created the following SCP and has attached it to an organizational unit (OU) that contains AWS account 1111-1111-1111:

Developers working in account 1111-1111-1111 complain that they cannot create Amazon S3 buckets. How should the Administrator address this problem?

- A. Add s3:CreateBucket with “Allow” effect to the SCP.

- B. Remove the account from the OU, and attach the SCP directly to account 1111-1111-1111.

- C. Instruct the Developers to add Amazon S3 permissions to their IAM entities.

- D. Remove the SCP from account 1111-1111-1111.

Answer: C
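The reasoning behind this answer is that an SCP sets an upper bound on permissions but does not grant any. A deliberately simplified sketch of that rule follows; the action sets are invented, because the SCP in the image is not reproduced here, and the model ignores explicit denies, resource policies, and permission boundaries.

```python
def is_allowed(action: str, scp_allowed: set, iam_allowed: set) -> bool:
    """An action works only if the SCP permits it AND an IAM policy grants it."""
    return action in scp_allowed and action in iam_allowed


# Hypothetical contents: the SCP permits S3, but the Developers' IAM
# entities have no S3 permissions yet.
scp_allowed = {"s3:CreateBucket", "ec2:RunInstances"}
iam_allowed = {"ec2:RunInstances"}

before = is_allowed("s3:CreateBucket", scp_allowed, iam_allowed)

# Granting the permission in IAM is what unblocks the Developers.
iam_allowed.add("s3:CreateBucket")
after = is_allowed("s3:CreateBucket", scp_allowed, iam_allowed)

print(before, after)
```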

NEW QUESTION 14

A company is creating an account strategy so that they can begin using AWS. The Security team will provide each team with the permissions they need to follow the principle of least privilege access. Teams would like to keep their resources isolated from other groups, and the Finance team would like each team’s resource usage separated for billing purposes.

Which account creation process meets these requirements and allows for changes?

- A. Create a new AWS Organizations account. Create groups in Active Directory and assign them to roles in AWS to grant federated access. Require each team to tag their resources, and separate bills based on tags. Control access to resources through IAM granting the minimally required privilege.

- B. Create individual accounts for each team. Assign the security account as the master account, and enable consolidated billing for all other accounts. Create a cross-account role for security to manage accounts, and send logs to a bucket in the security account.

- C. Create a new AWS account, and use AWS Service Catalog to provide teams with the required resources. Implement a third-party billing solution to provide the Finance team with the resource use for each team based on tagging. Isolate resources using IAM to avoid account sprawl. Security will control and monitor logs and permissions.

- D. Create a master account for billing using Organizations, and create each team’s account from that master account. Create a security account for logs and cross-account access. Apply service control policies on each account, and grant the Security team cross-account access to all accounts. Security will create IAM policies for each account to maintain least privilege access.

Answer: B

NEW QUESTION 15

A company wants to ensure that the workloads for each of its business units have complete autonomy and a minimal blast radius in AWS. The Security team must be able to control access to the resources and services in the account to ensure that particular services are not used by the business units.

How can a Solutions Architect achieve the isolation requirements?

- A. Create individual accounts for each business unit and add the account to an OU in AWS Organizations. Modify the OU to ensure that the particular services are blocked. Federate each account with an IdP, and create separate roles for the business units and the Security team.

- B. Create individual accounts for each business unit. Federate each account with an IdP and create separate roles and policies for business units and the Security team.

- C. Create one shared account for the entire company. Create separate VPCs for each business unit. Create individual IAM policies and resource tags for each business unit. Federate each account with an IdP, and create separate roles for the business units and the Security team.

- D. Create one shared account for the entire company. Create individual IAM policies and resource tags for each business unit. Federate the account with an IdP, and create separate roles for the business units and the Security team.

Answer: A

NEW QUESTION 16

A company is designing a new highly available web application on AWS. The application requires consistent and reliable connectivity from the application servers in AWS to a backend REST API hosted in the company’s on-premises environment. The backend connection between AWS and on-premises will be routed over an AWS Direct Connect connection through a private virtual interface. Amazon Route 53 will be used to manage private DNS records for the application to resolve the IP address on the backend REST API.

Which design would provide a reliable connection to the backend API?

- A. Implement at least two backend endpoints for the backend REST API, and use Route 53 health checks to monitor the availability of each backend endpoint and perform DNS-level failover.

- B. Install a second Direct Connect connection from a different network carrier and attach it to the same virtual private gateway as the first Direct Connect connection.

- C. Install a second cross connect for the same Direct Connect connection from the same network carrier, and join both connections to the same link aggregation group (LAG) on the same private virtual interface.

- D. Create an IPSec VPN connection routed over the public internet from the on-premises data center to AWS and attach it to the same virtual private gateway as the Direct Connect connection.

Answer: A

NEW QUESTION 17

A company is migrating its on-premises build artifact server to an AWS solution. The current system consists of an Apache HTTP server that serves artifacts to clients on the local network, restricted by the perimeter firewall. The artifact consumers are largely build automation scripts that download artifacts via anonymous HTTP, which the company will be unable to modify within its migration timetable.

The company decides to move the solution to Amazon S3 static website hosting. The artifact consumers will be migrated to Amazon EC2 instances located within both public and private subnets in a virtual private cloud (VPC).

Which solution will permit the artifact consumers to download artifacts without modifying the existing automation scripts?

- A. Create a NAT gateway within a public subnet of the VPC. Add a default route pointing to the NAT gateway into the route table associated with the subnets containing consumers. Configure the bucket policy to allow the s3:ListBucket and s3:GetObject actions using the condition IpAddress and the condition key aws:SourceIp matching the Elastic IP address of the NAT gateway.

- B. Create a VPC endpoint and add it to the route table associated with subnets containing consumers. Configure the bucket policy to allow s3:ListBucket and s3:GetObject actions using the condition StringEquals and the condition key aws:sourceVpce matching the identification of the VPC endpoint.

- C. Create an IAM role and instance profile for Amazon EC2 and attach it to the instances that consume build artifacts. Configure the bucket policy to allow the s3:ListBucket and s3:GetObjects actions for the principal matching the IAM role created.

- D. Create a VPC endpoint and add it to the route table associated with subnets containing consumers. Configure the bucket policy to allow s3:ListBucket and s3:GetObject actions using the condition IpAddress and the condition key aws:SourceIp matching the VPC CIDR block.

Answer: B
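A bucket policy of the kind the VPC endpoint option describes might look roughly like the sketch below. The bucket name and endpoint ID are placeholders; only the actions, the StringEquals operator, and the aws:sourceVpce key come from the option text.

```python
import json


def vpce_only_bucket_policy(bucket: str, vpce_id: str) -> dict:
    """Allow anonymous list/get requests only when they arrive via one VPC endpoint."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowReadFromVpcEndpoint",
                "Effect": "Allow",
                "Principal": "*",
                "Action": ["s3:ListBucket", "s3:GetObject"],
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                "Condition": {"StringEquals": {"aws:sourceVpce": vpce_id}},
            }
        ],
    }


# Both arguments are hypothetical values for illustration.
bucket_policy = vpce_only_bucket_policy("example-artifacts", "vpce-0123456789abcdef0")
print(json.dumps(bucket_policy, indent=2))
```

Since the condition is evaluated on the network path rather than on credentials, the build scripts can keep issuing unauthenticated requests.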

NEW QUESTION 18

A company is currently running a production workload on AWS that is very I/O intensive. Its workload consists of a single tier with 10 c4.8xlarge instances, each with 2 TB gp2 volumes. The number of processing jobs has recently increased, and latency has increased as well. The team realizes that they are constrained on the IOPS. For the application to perform efficiently, they need to increase the IOPS by 3,000 for each of the instances.

Which of the following designs will meet the performance goal MOST cost effectively?

- A. Change the type of Amazon EBS volume from gp2 to io1 and set provisioned IOPS to 9,000.

- B. Increase the size of the gp2 volumes in each instance to 3 TB.

- C. Create a new Amazon EFS file system and move all the data to this new file system. Mount this file system to all 10 instances.

- D. Create a new Amazon S3 bucket and move all the data to this new bucket. Allow each instance to access this S3 bucket and use it for storage.

Answer: B
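The arithmetic behind this answer can be sketched as follows, assuming the gp2 baseline of 3 IOPS per GiB with a floor of 100 and a ceiling of 16,000 IOPS, and treating 1 TB as 1,024 GiB.

```python
def gp2_baseline_iops(size_gib: int) -> int:
    """Baseline IOPS of a gp2 volume: 3 per GiB, clamped to [100, 16000]."""
    return max(100, min(3 * size_gib, 16000))


current = gp2_baseline_iops(2 * 1024)   # 2 TB volume
resized = gp2_baseline_iops(3 * 1024)   # 3 TB volume
gain = resized - current

print(current, resized, gain)
```

Growing each volume by 1 TB adds roughly 3,000 baseline IOPS without paying for provisioned IOPS.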

NEW QUESTION 19

During an audit, a Security team discovered that a Development team was putting IAM user secret access keys in their code and then committing it to an AWS CodeCommit repository. The Security team wants to automatically find and remediate instances of this security vulnerability.

Which solution will ensure that the credentials are appropriately secured automatically?

- A. Run a script nightly using AWS Systems Manager Run Command to search for credentials on the development instances. If found, use AWS Secrets Manager to rotate the credentials.

- B. Use a scheduled AWS Lambda function to download and scan the application code from CodeCommit. If credentials are found, generate new credentials and store them in AWS KMS.

- C. Configure Amazon Macie to scan for credentials in CodeCommit repositories. If credentials are found, trigger an AWS Lambda function to disable the credentials and notify the user.

- D. Configure a CodeCommit trigger to invoke an AWS Lambda function to scan new code submissions for credentials. If credentials are found, disable them in AWS IAM and notify the user.

Answer: C
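Several of the options depend on scanning source code for credentials. A minimal sketch of such a scan is shown below; it assumes that long-term IAM user access key IDs start with `AKIA` followed by 16 uppercase letters or digits, and it uses the example key ID from the AWS documentation. It finds key IDs only and does not perform any remediation.

```python
import re

# Assumed shape of a long-term IAM access key ID: "AKIA" + 16 characters.
ACCESS_KEY_ID = re.compile(r"\bAKIA[0-9A-Z]{16}\b")


def find_access_key_ids(source: str) -> list:
    """Return every string in the source that looks like an access key ID."""
    return ACCESS_KEY_ID.findall(source)


committed_code = 'aws_access_key_id = "AKIAIOSFODNN7EXAMPLE"\nprint("deploying")'
print(find_access_key_ids(committed_code))
```

Whichever service runs the scan, the follow-up step in the options is the same: disable or rotate the exposed key and notify its owner.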

NEW QUESTION 20

A company has a data center that must be migrated to AWS as quickly as possible. The data center has a 500 Mbps AWS Direct Connect link and a separate, fully available 1 Gbps ISP connection. A Solutions Architect must transfer 20 TB of data from the data center to an Amazon S3 bucket.

What is the FASTEST way to transfer the data?

- A. Upload the data to the S3 bucket using the existing DX link.

- B. Send the data to AWS using the AWS Import/Export service.

- C. Upload the data using an 80 TB AWS Snowball device.

- D. Upload the data to the S3 bucket using S3 Transfer Acceleration.

Answer: D

Explanation:

https://aws.amazon.com/s3/faqs/
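A back-of-the-envelope comparison of the two links helps explain the answer. The sketch assumes ideal sustained throughput, decimal units (1 TB = 10^12 bytes), and no protocol overhead, so real transfers would take longer.

```python
def transfer_hours(data_terabytes: float, link_mbps: float) -> float:
    """Ideal time in hours to move the data over a link of the given speed."""
    bits = data_terabytes * 1e12 * 8
    seconds = bits / (link_mbps * 1e6)
    return seconds / 3600


dx_hours = transfer_hours(20, 500)     # 500 Mbps Direct Connect link
isp_hours = transfer_hours(20, 1000)   # 1 Gbps ISP connection

print(round(dx_hours, 1), round(isp_hours, 1))
```

Under these assumptions the ISP link needs about two days and the Direct Connect link about four, which is why the internet path with S3 Transfer Acceleration comes out ahead of the DX upload.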

NEW QUESTION 21

A company has implemented AWS Organizations. It has recently set up a number of new accounts and wants to deny access to a specific set of AWS services in these new accounts.

How can this be controlled MOST efficiently?

- A. Create an IAM policy in each account that denies access to the services. Associate the policy with an IAM group, and add all IAM users to the group.

- B. Create a service control policy that denies access to the services. Add all of the new accounts to a single organizational unit (OU), and apply the policy to that OU.

- C. Create an IAM policy in each account that denies access to the services. Associate the policy with an IAM role, and instruct users to log in using their corporate credentials and assume the IAM role.

- D. Create a service control policy that denies access to the services, and apply the policy to the root of the organization.

Answer: B

NEW QUESTION 22

A company is having issues with a newly deployed serverless infrastructure that uses Amazon API Gateway, AWS Lambda, and Amazon DynamoDB.

In a steady state, the application performs as expected. However, during peak load, tens of thousands of simultaneous invocations are needed and user requests fail multiple times before succeeding. The company has checked the logs for each component, focusing specifically on Amazon CloudWatch Logs for Lambda. There are no errors logged by the services or applications.

What might cause this problem?

- A. Lambda has very low memory assigned, which causes the function to fail at peak load.

- B. Lambda is in a subnet that uses a NAT gateway to reach out to the internet, and the function instance does not have sufficient Amazon EC2 resources in the VPC to scale with the load.

- C. The throttle limit set on API Gateway is very low. During peak load, the additional requests are not making their way through to Lambda.

- D. DynamoDB is set up in an auto scaling mode. During peak load, DynamoDB adjusts capacity and throughput successfully.

Answer: A

NEW QUESTION 23

A company has a High Performance Computing (HPC) cluster in its on-premises data center which runs thousands of jobs in parallel for one week every month, processing petabytes of images. The images are stored on a network file server, which is replicated to a disaster recovery site. The on-premises data center has reached capacity and has started to spread the jobs out over the course of the month in order to better utilize the cluster, causing a delay in job completion.

The company has asked its Solutions Architect to design a cost-effective solution on AWS to scale beyond the current capacity of 5,000 cores and 10 petabytes of data. The solution must require the least amount of management overhead and maintain the current level of durability.

Which solution will meet the company’s requirements?

- A. Create a container in the Amazon Elastic Container Registry with the executable file for the job. Use Amazon ECS with Spot Fleet in Auto Scaling groups. Store the raw data in Amazon EBS SC1 volumes and write the output to Amazon S3.

- B. Create an Amazon EMR cluster with a combination of On Demand and Reserved Instance Task Nodes that will use Spark to pull data from Amazon S3. Use Amazon DynamoDB to maintain a list of jobs that need to be processed by the Amazon EMR cluster.

- C. Store the raw data in Amazon S3, and use AWS Batch with Managed Compute Environments to create Spot Fleets. Submit jobs to AWS Batch Job Queues to pull down objects from Amazon S3 onto Amazon EBS volumes for temporary storage to be processed, and then write the results back to Amazon S3.

- D. Submit the list of jobs to be processed to an Amazon SQS to queue the jobs that need to be processed. Create a diversified cluster of Amazon EC2 worker instances using Spot Fleet that will automatically scale based on the queue depth. Use Amazon EFS to store all the data sharing it across all instances in the cluster.

Answer: B

NEW QUESTION 24

A company uses Amazon S3 to store documents that may only be accessible to an Amazon EC2 instance in a certain virtual private cloud (VPC). The company fears that a malicious insider with access to this instance could also set up an EC2 instance in another VPC to access these documents.

Which of the following solutions will provide the required protection?

- A. Use an S3 VPC endpoint and an S3 bucket policy to limit access to this VPC endpoint.

- B. Use EC2 instance profiles and an S3 bucket policy to limit access to the role attached to the instance profile.

- C. Use S3 client-side encryption and store the key in the instance metadata.

- D. Use S3 server-side encryption and protect the key with an encryption context.

Answer: A

Explanation:

https://docs.aws.amazon.com/vpc/latest/userguide/vpce-gateway.html

Endpoint connections cannot be extended out of a VPC. Resources on the other side of a VPN connection, VPC peering connection, AWS Direct Connect connection, or ClassicLink connection in your VPC cannot use the endpoint to communicate with resources in the endpoint service.
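The bucket-policy side of this answer can be sketched as follows. This is a minimal example, assuming a deny-unless-from-endpoint statement built on the `aws:SourceVpce` condition key; the helper name `build_vpce_only_policy`, the bucket name, and the endpoint ID are hypothetical placeholders.

```python
import json


def build_vpce_only_policy(bucket: str, vpce_id: str) -> dict:
    """Return a bucket policy that denies S3 access unless it arrives via vpce_id."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUnlessFromVpce",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object in it
                "Resource": [
                    f"arn:aws:s3:::{bucket}",
                    f"arn:aws:s3:::{bucket}/*",
                ],
                # Requests not coming through this endpoint match the Deny
                "Condition": {"StringNotEquals": {"aws:SourceVpce": vpce_id}},
            }
        ],
    }


# Placeholder names, for illustration only
policy = build_vpce_only_policy("example-documents-bucket", "vpce-0123456789abcdef0")
print(json.dumps(policy, indent=2))
```

Because the condition is tied to the endpoint rather than to a role or credential, an instance launched in a different VPC would not satisfy it even with the same IAM permissions.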

NEW QUESTION 25

A large multinational company runs a timesheet application on AWS that is used by staff across the world. The application runs on Amazon EC2 instances in an Auto Scaling group behind an Elastic Load Balancing (ELB) load balancer, and stores its data in an Amazon RDS MySQL Multi-AZ database instance.

The CFO is concerned about the impact on the business if the application is not available. The application must not be down for more than two hours, but the solution must be as cost-effective as possible.

How should the Solutions Architect meet the CFO’s requirements while minimizing data loss?

- A. In another region, configure a read replica and create a copy of the infrastructure. When an issue occurs, promote the read replica and configure as an Amazon RDS Multi-AZ database instance. Update the DNS to point to the other region’s ELB.

- B. Configure a 1-day window of 60-minute snapshots of the Amazon RDS Multi-AZ database instance. Create an AWS CloudFormation template of the application infrastructure that uses the latest snapshot. When an issue occurs, use the AWS CloudFormation template to create the environment in another region. Update the DNS record to point to the other region’s ELB.

- C. Configure a 1-day window of 60-minute snapshots of the Amazon RDS Multi-AZ database instance which is copied to another region. Create an AWS CloudFormation template of the application infrastructure that uses the latest copied snapshot. When an issue occurs, use the AWS CloudFormation template to create the environment in another region. Update the DNS record to point to the other region’s ELB.

- D. Configure a read replica in another region. Create an AWS CloudFormation template of the application infrastructure. When an issue occurs, promote the read replica and configure as an Amazon RDS Multi-AZ database instance and use the AWS CloudFormation template to create the environment in another region using the promoted Amazon RDS instance. Update the DNS record to point to the other region’s ELB.

Answer: D
